Your NVIDIA Blackwell Journey Starts Here

In this transformative era for AI, where ever-evolving scaling laws keep pushing the limits of data center capabilities, our latest NVIDIA Blackwell solutions, developed in close collaboration with NVIDIA, deliver unprecedented computational performance, density, and efficiency through next-generation air-cooled and liquid-cooled architecture. With our readily deployable AI Data Center Building Block Solutions, Supermicro is your partner of choice for starting your NVIDIA Blackwell journey, providing sustainable, cutting-edge solutions that accelerate AI innovation.

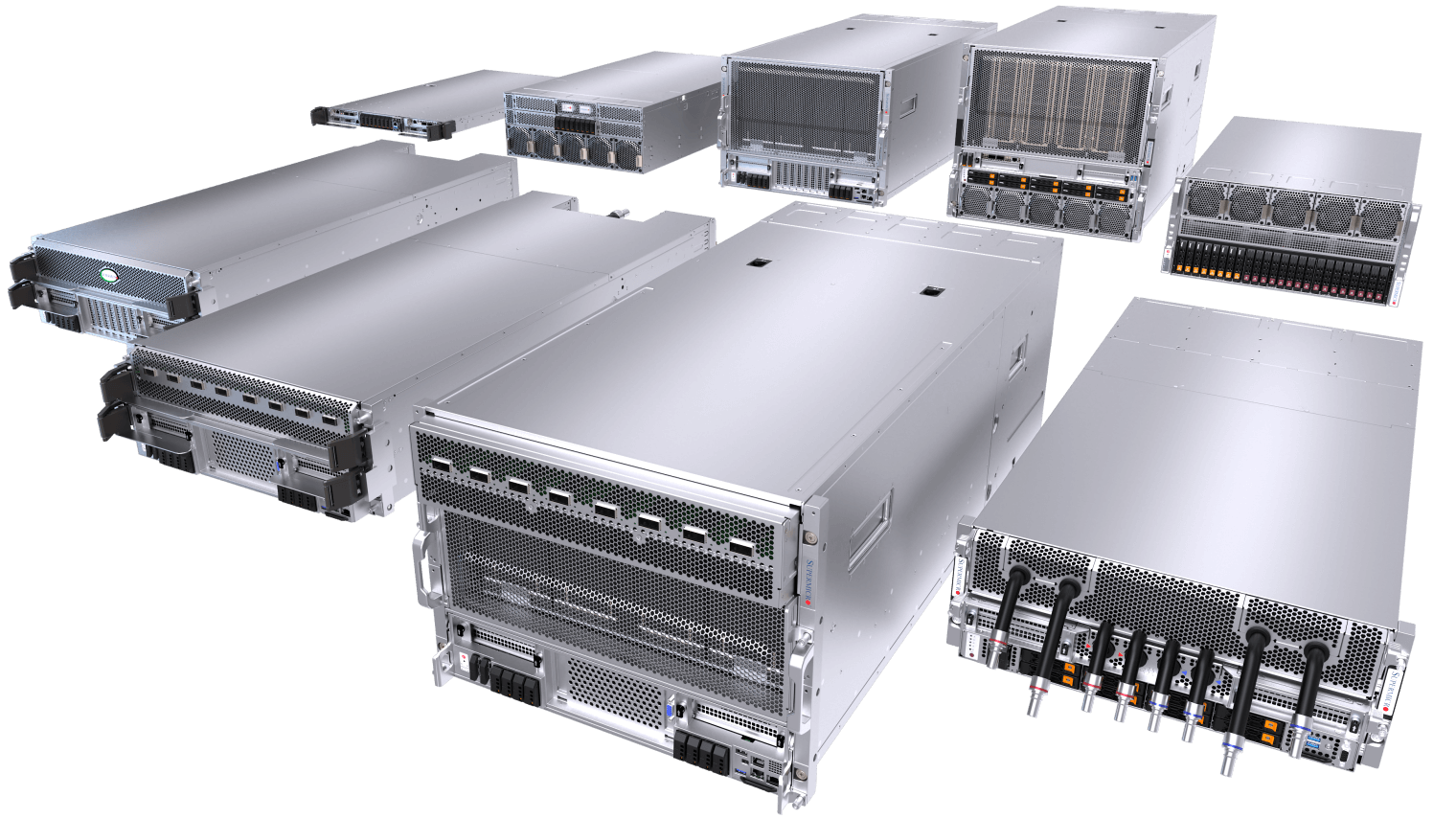

The Advantage of End-to-End Modular AI Data Center Solutions

A wide range of air-cooled and liquid-cooled systems with multiple CPU options, a complete data center management software suite, turnkey rack-level integration with full networking, cabling, and cluster-level L12 validation, plus global delivery, support, and service.

- Extensive Experience

- Supermicro's AI data center building block solutions power the world's largest liquid-cooled AI data center deployment.

- Flexible Offerings

- Air or liquid cooling, GPU optimization, multiple system and rack form factors, CPUs, storage, and networking options. Optimized for your needs.

- Liquid Cooling Pioneer

- Proven, scalable, plug-and-play liquid cooling solutions to sustain the AI revolution. Designed specifically for the NVIDIA Blackwell architecture.

- Fast Time-to-Online

- Accelerated delivery with global capacity, world-class deployment expertise, and one-stop-shop services to get your AI into production, fast.

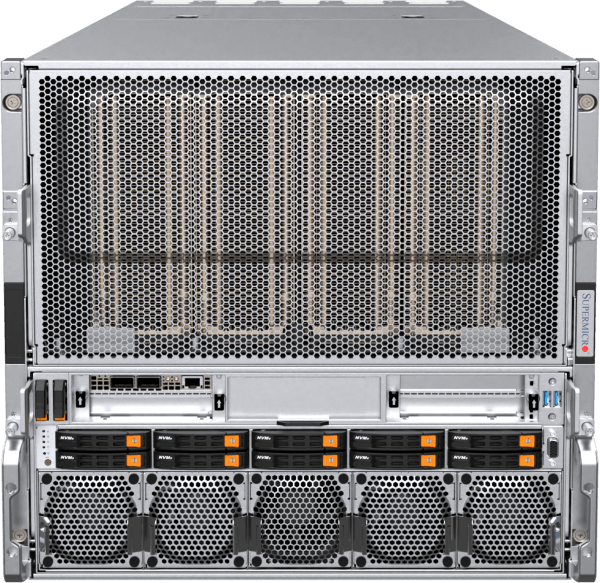

The Most Compact Hyperscale AI Platform

NVIDIA HGX™ B300 System Optimized for OCP ORV3 Design with up to 144 GPUs in a Rack

Supermicro's 2OU liquid-cooled NVIDIA HGX B300 system delivers unmatched GPU density for hyperscale deployments. Built to the OCP ORV3 specification with advanced DLC-2 technology, each compact 8-GPU node fits in a 21-inch rack, allowing up to 18 nodes and 144 GPUs in total per rack. With blind-mate connections and a modular GPU/CPU tray architecture, the system supports up to 1,100 W TDP per B300 GPU while significantly reducing rack footprint, power consumption, and cooling costs. Ideal for AI factories that demand maximum performance density and exceptional serviceability.
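The rack density figures above follow from simple multiplication. The sketch below, assuming only the numbers stated in the text (18 nodes per rack, 8 GPUs per node, up to 1,100 W TDP per GPU), reproduces the 144-GPU total and sums the GPU TDP. The TDP sum covers GPUs only; CPUs, NICs, switches, fans, and power conversion losses are deliberately excluded, so it is not a full rack power budget.

```python
# Sketch: GPU count and aggregate GPU TDP for one 2OU HGX B300 ORV3 rack.
# Inputs come from the text; the TDP sum is GPU-only (assumption: all other
# rack loads such as CPUs, NICs, switches and PSU losses are excluded).

NODES_PER_RACK = 18
GPUS_PER_NODE = 8
GPU_TDP_W = 1_100


def rack_gpu_count(nodes: int, gpus_per_node: int) -> int:
    """Total GPUs in the rack."""
    return nodes * gpus_per_node


def rack_gpu_tdp_kw(gpu_count: int, tdp_w: int) -> float:
    """Sum of GPU TDP in kilowatts (GPU silicon only, not full rack power)."""
    return gpu_count * tdp_w / 1000


gpus = rack_gpu_count(NODES_PER_RACK, GPUS_PER_NODE)
print(gpus)                              # 144
print(rack_gpu_tdp_kw(gpus, GPU_TDP_W))  # 158.4
```

A real rack power plan would add the non-GPU loads on top of this figure.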

288 GB of HBM3e memory per GPU

2OU Liquid-Cooled System

for NVIDIA HGX B300 8-GPU

Up to 144 NVIDIA Blackwell Ultra GPUs in a standardized ORV3 rack

Ultra performance for AI reasoning

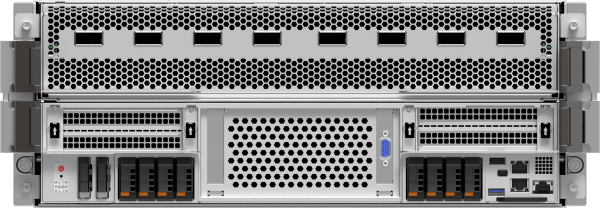

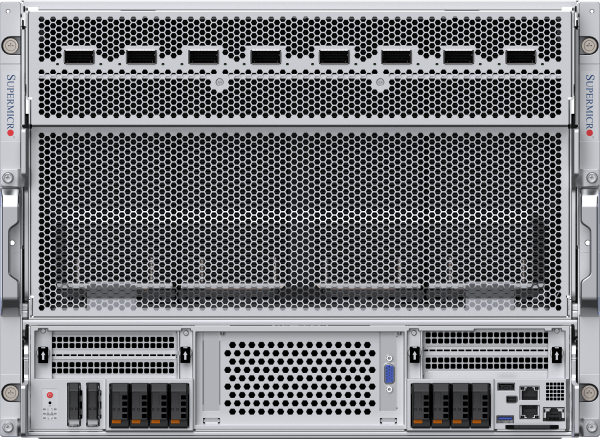

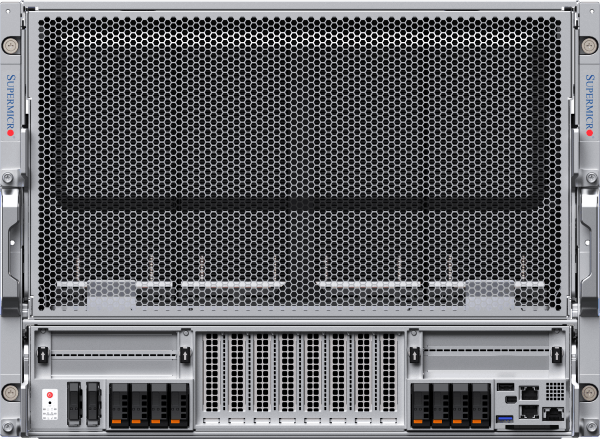

The Most Advanced Air-Cooled and Liquid-Cooled Architecture for NVIDIA HGX™ B300

The Supermicro HGX platform powers many of the world's largest AI clusters, delivering the computational power needed for today's transformative AI applications. Now featuring NVIDIA Blackwell Ultra, the 8U air-cooled system maximizes the performance of eight 1,100 W HGX B300 GPUs with 2.3 TB of total HBM3e memory. Eight front-facing OSFP ports with integrated 800 Gb/s ConnectX®-8 SuperNICs enable turnkey deployment of NVIDIA Quantum-X800 InfiniBand or Spectrum-X™ Ethernet clusters. The 4U liquid-cooled system features DLC-2 technology with 98% heat capture, enabling 40% data center power savings. Supermicro Data Center Building Block Solutions® (DCBBS) and on-site deployment expertise provide complete solutions for liquid cooling, network topology and cabling, power delivery, and thermal management to accelerate AI factory bring-up.
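The 2.3 TB memory figure is the per-GPU capacity multiplied by the GPU count. This sketch assumes the 288 GB per GPU stated earlier for the B300 and decimal units (1 TB = 1,000 GB); with binary units the rounded figure would differ slightly.

```python
# Sketch: aggregate HBM3e capacity of one 8-GPU HGX B300 system.
# Assumptions: 288 GB per GPU (from the text) and decimal units (1 TB = 1000 GB).

HBM_PER_GPU_GB = 288
GPUS_PER_SYSTEM = 8


def total_hbm_tb(gpus: int, hbm_per_gpu_gb: int) -> float:
    """Total HBM3e across all GPUs in the system, in decimal TB."""
    return gpus * hbm_per_gpu_gb / 1000


print(total_hbm_tb(GPUS_PER_SYSTEM, HBM_PER_GPU_GB))  # 2.304, quoted as 2.3 TB
```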

4U Liquid-Cooled or 8U Air-Cooled System

for NVIDIA HGX B300 8-GPU

Liquid-cooled front I/O system with integrated NVIDIA ConnectX-8 800 Gb/s networking

Air-cooled front I/O system for NVIDIA Blackwell Ultra HGX B300 8-GPU with integrated NVIDIA ConnectX-8 networking

An Exascale of Compute in a Rack

End-to-End Liquid-Cooling Solution for NVIDIA GB300 NVL72

The Supermicro GB300 NVL72 addresses the computing demands of AI, from foundation model training to large-scale reasoning inference. It combines high AI performance with Supermicro's direct liquid cooling technology, enabling maximum compute density and efficiency. Based on NVIDIA Blackwell Ultra, a single rack integrates 72 NVIDIA B300 GPUs, each with 288 GB of HBM3e memory. With 1.8 TB/s NVLink interconnects, the GB300 NVL72 operates as an exascale supercomputer in a single node. The upgraded networking doubles compute fabric performance, supporting 800 Gb/s speeds. Supermicro's manufacturing capacity and end-to-end services accelerate the deployment of liquid-cooled AI factories and shorten time-to-market for GB300 NVL72 clusters.
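To put the NVL72 figures in rack-level terms, the sketch below multiplies the stated per-GPU values across the 72-GPU NVLink domain. It assumes the 1.8 TB/s NVLink figure is per GPU (as with fifth-generation NVLink) and uses decimal units; the bandwidth total is a simple sum of per-GPU links, not a measured throughput.

```python
# Sketch: aggregate HBM3e capacity and summed NVLink bandwidth for one GB300 NVL72 rack.
# From the text: 72 GPUs, 288 GB HBM3e each, 1.8 TB/s NVLink.
# Assumptions: 1.8 TB/s is per-GPU bandwidth; decimal units (1 TB = 1000 GB).

GPUS_PER_RACK = 72
HBM_PER_GPU_GB = 288
NVLINK_PER_GPU_TBPS = 1.8


def rack_hbm_tb(gpus: int, hbm_per_gpu_gb: int) -> float:
    """Total HBM3e in the NVLink domain, in decimal TB."""
    return gpus * hbm_per_gpu_gb / 1000


def rack_nvlink_tbps(gpus: int, per_gpu_tbps: float) -> float:
    """Sum of per-GPU NVLink bandwidth across the domain, in TB/s."""
    return gpus * per_gpu_tbps


print(rack_hbm_tb(GPUS_PER_RACK, HBM_PER_GPU_GB))                       # 20.736
print(round(rack_nvlink_tbps(GPUS_PER_RACK, NVLINK_PER_GPU_TBPS), 1))  # 129.6
```

The rounding in the last line only hides floating-point noise from multiplying by 1.8.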

NVIDIA GB300 NVL72 et GB200 NVL72

for NVIDIA GB300/GB200 Grace™ Blackwell Superchip

72 NVIDIA Blackwell Ultra GPUs in one NVIDIA NVLink domain. Now with Ultra performance and scalability

72 NVIDIA Blackwell GPUs in one NVIDIA NVLink domain. The pinnacle of AI computing architecture.

Air-Cooled System, Evolved

The best-selling air-cooled system, redesigned and optimized for NVIDIA HGX B200 8-GPU.

The new air-cooled NVIDIA HGX B200 8-GPU systems feature an enhanced cooling architecture, extensive configurability for CPU, memory, storage, and networking, and improved front and rear serviceability. Up to four of the new 8U/10U air-cooled systems can be installed and fully integrated in a rack, offering the same density as the previous generation while delivering up to 15x more inference performance and 3x more training performance. All Supermicro HGX B200 systems feature a 1:1 GPU-to-NIC ratio supporting NVIDIA BlueField®-3 or NVIDIA ConnectX®-7 for scaling across a high-performance compute fabric.

8U Front I/O or 10U Rear I/O Air-Cooled System

for NVIDIA HGX B200 8-GPU

Air-cooled front I/O system offering greater flexibility in system memory configuration and cold-aisle serviceability

Air-cooled rear I/O system for large language model training and high-volume inference

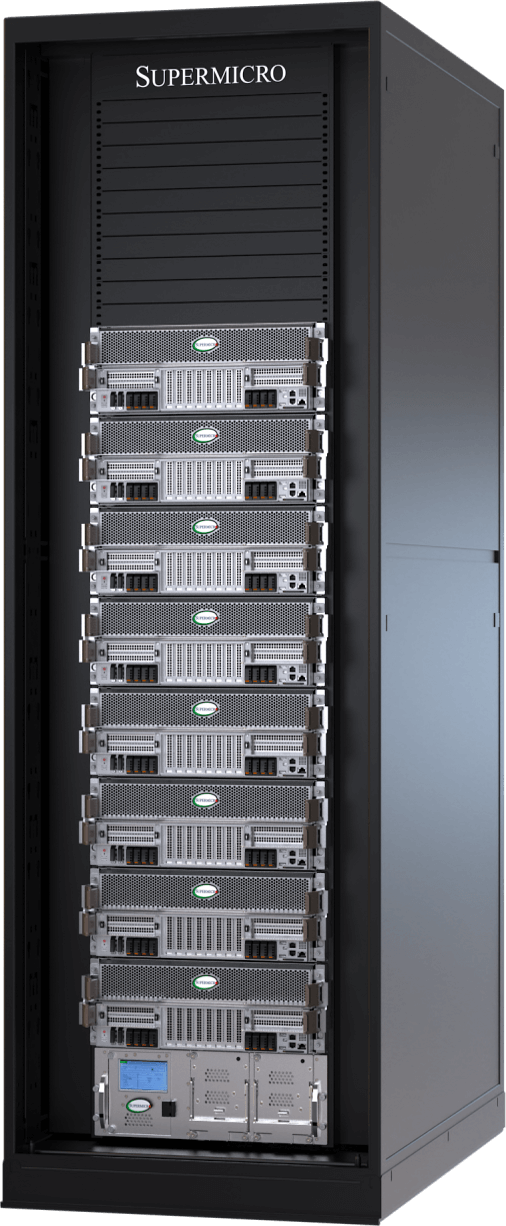

Next-Generation Liquid-Cooled System

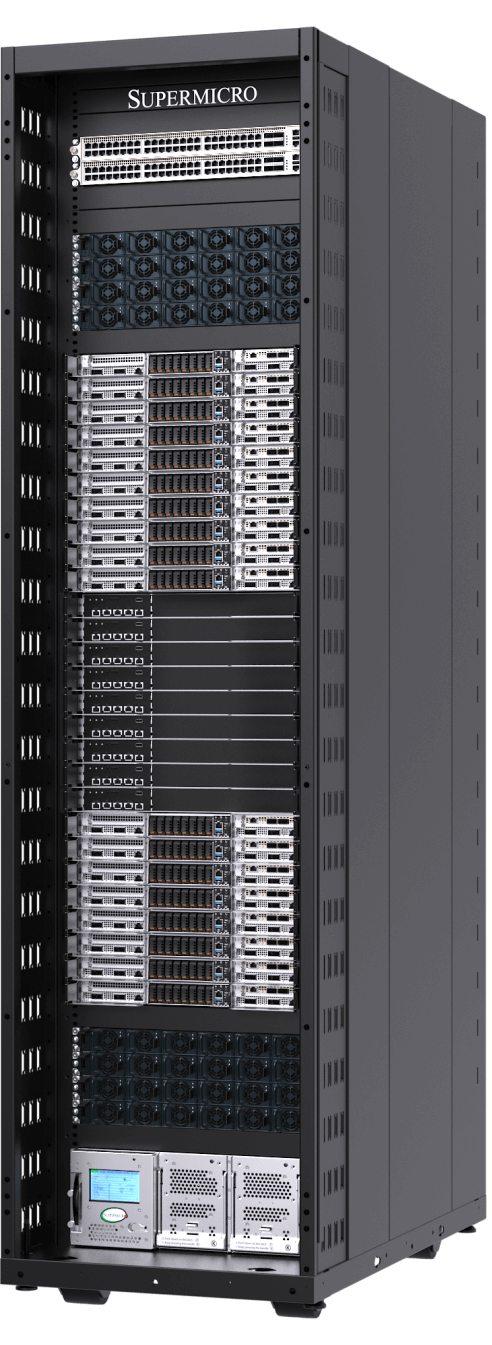

Up to 96 NVIDIA HGX™ B200 GPUs in a single rack with maximum scalability and efficiency

The new front I/O, liquid-cooled 4U NVIDIA HGX B200 8-GPU system integrates Supermicro DLC-2 technology. Direct liquid cooling now captures up to 92% of the heat generated by server components such as the CPU, GPU, PCIe switch, DIMMs, VRMs, and PSUs, enabling up to 40% data center power savings and noise levels as low as 50 dB. The new architecture further improves on the efficiency and serviceability of the previous model, which was designed for NVIDIA HGX H100/H200 8-GPU systems. Available in 42U, 48U, or 52U configurations, the rack-scale design with new vertical coolant distribution manifolds (CDMs) means horizontal manifolds no longer take up valuable rack space. This allows 8 systems with 64 NVIDIA Blackwell GPUs in a 42U rack and up to 12 systems with 96 NVIDIA Blackwell GPUs in a 52U rack.
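The per-rack GPU counts follow from the 4U system height and 8 GPUs per system. This sketch, using only those two figures from the text, checks that 8 systems fit in 42U and 12 systems in 52U and reports the rack units left over. What occupies the remaining units (power shelves, switches, CDU hardware) is not specified in the text and is left open here.

```python
# Sketch: U-space and GPU count for racks of 4U liquid-cooled HGX B200 systems.
# From the text: 4U per system, 8 GPUs per system, 8 systems in 42U, 12 in 52U.
# The use of the leftover rack units is not specified and is not modeled.

SYSTEM_HEIGHT_U = 4
GPUS_PER_SYSTEM = 8


def rack_layout(rack_u: int, systems: int) -> dict:
    """Return GPU count, used U and free U, or raise if the systems do not fit."""
    used_u = systems * SYSTEM_HEIGHT_U
    if used_u > rack_u:
        raise ValueError(f"{systems} systems need {used_u}U, rack has {rack_u}U")
    return {"gpus": systems * GPUS_PER_SYSTEM, "used_u": used_u, "free_u": rack_u - used_u}


for rack_u, systems in ((42, 8), (52, 12)):
    print(rack_u, rack_layout(rack_u, systems))
# 42 {'gpus': 64, 'used_u': 32, 'free_u': 10}
# 52 {'gpus': 96, 'used_u': 48, 'free_u': 4}
```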

4U Front I/O or Rear I/O Liquid-Cooled System

for NVIDIA HGX B200 8-GPU

DLC-2 liquid-cooled front I/O system with up to 40% data center power savings and noise levels as low as 50 dB

Liquid-cooled rear I/O system designed for maximum compute density and performance

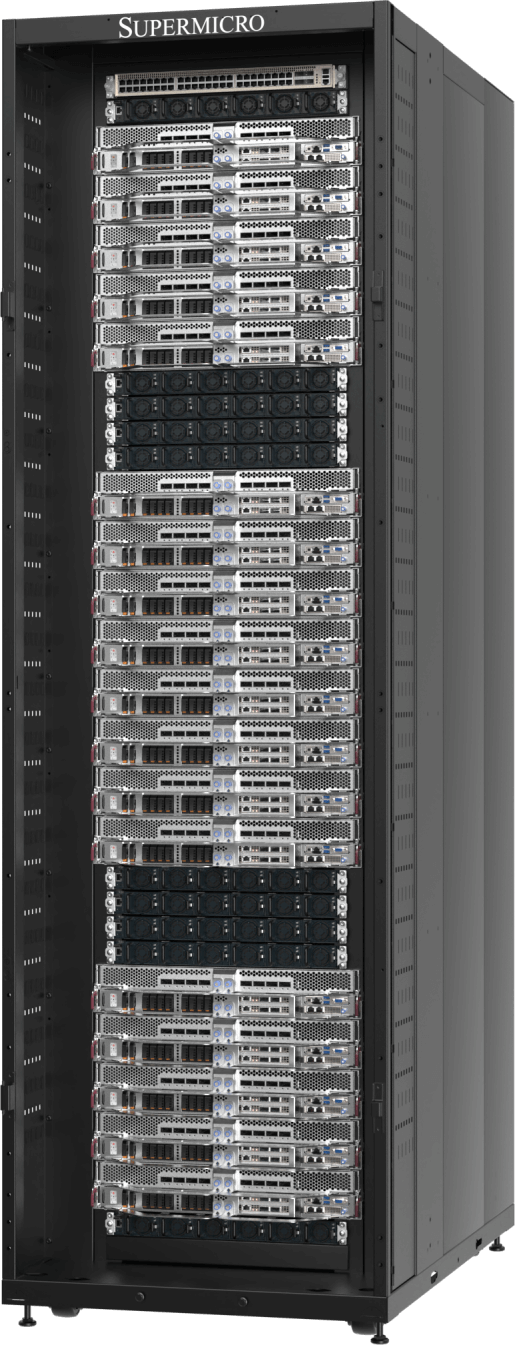

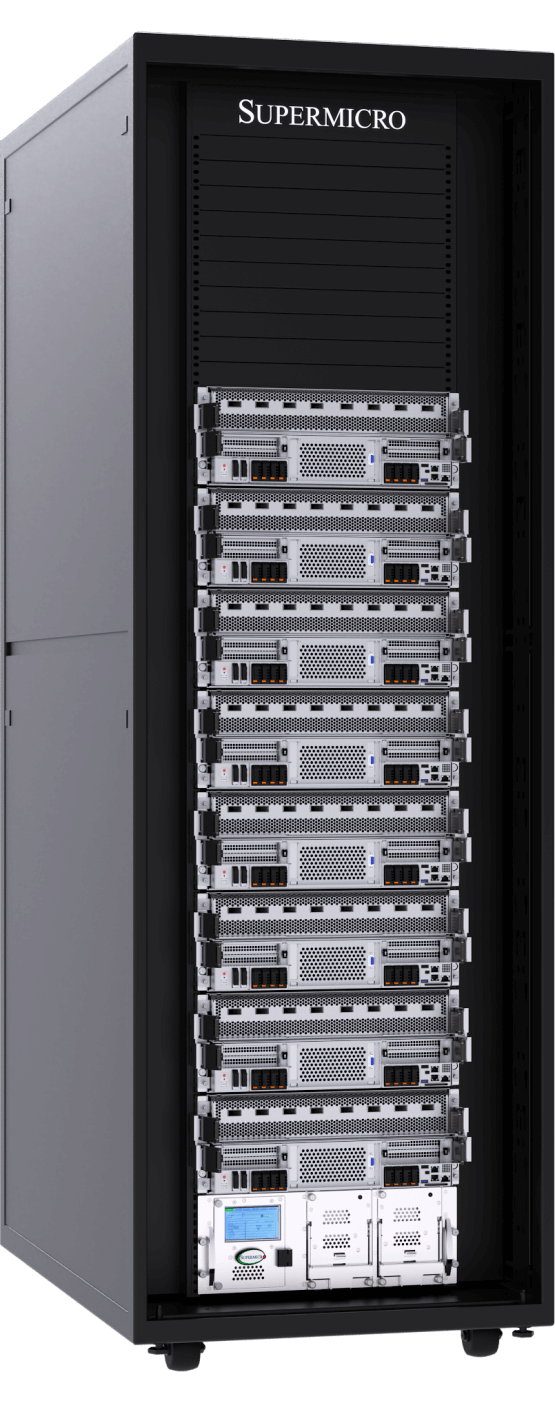

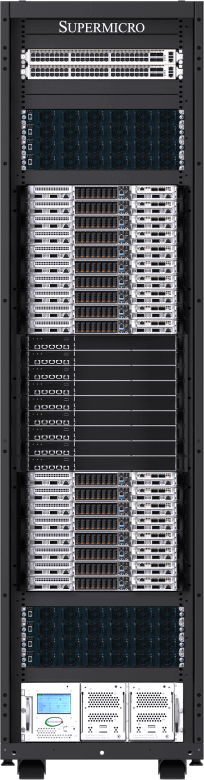

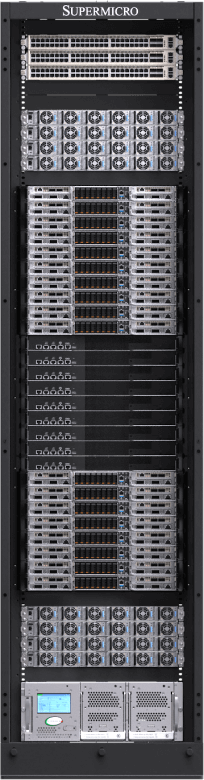

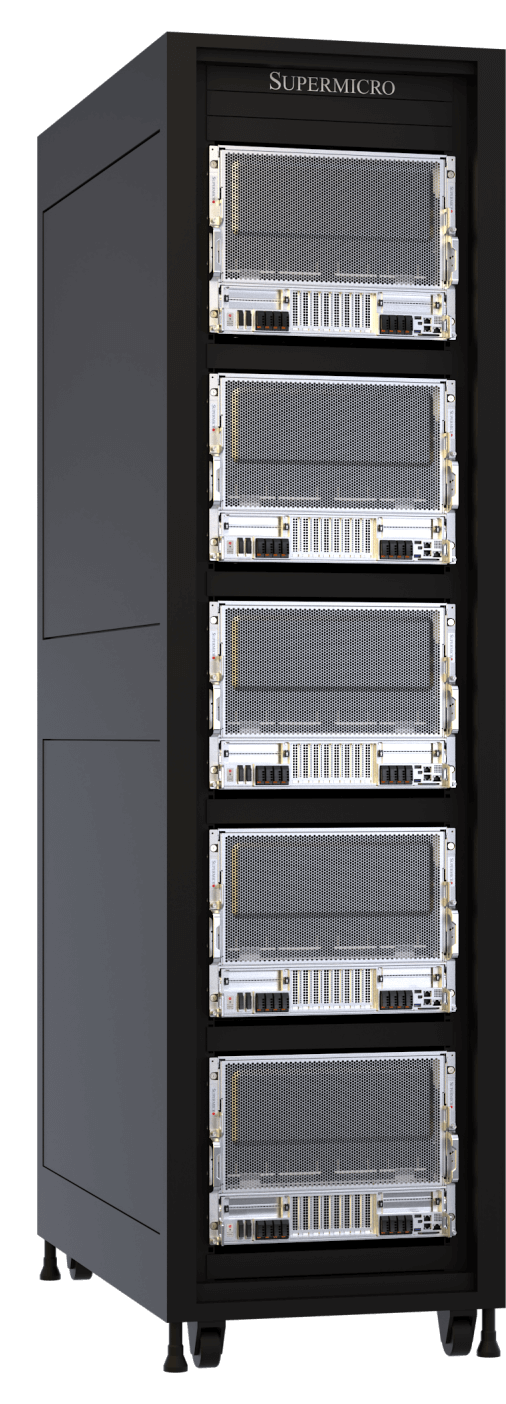

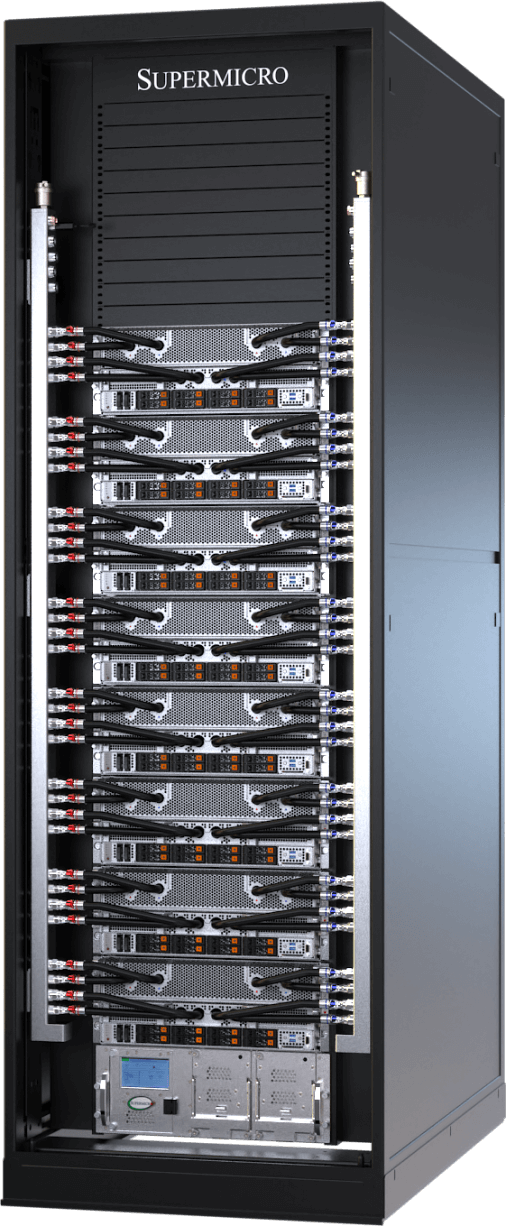

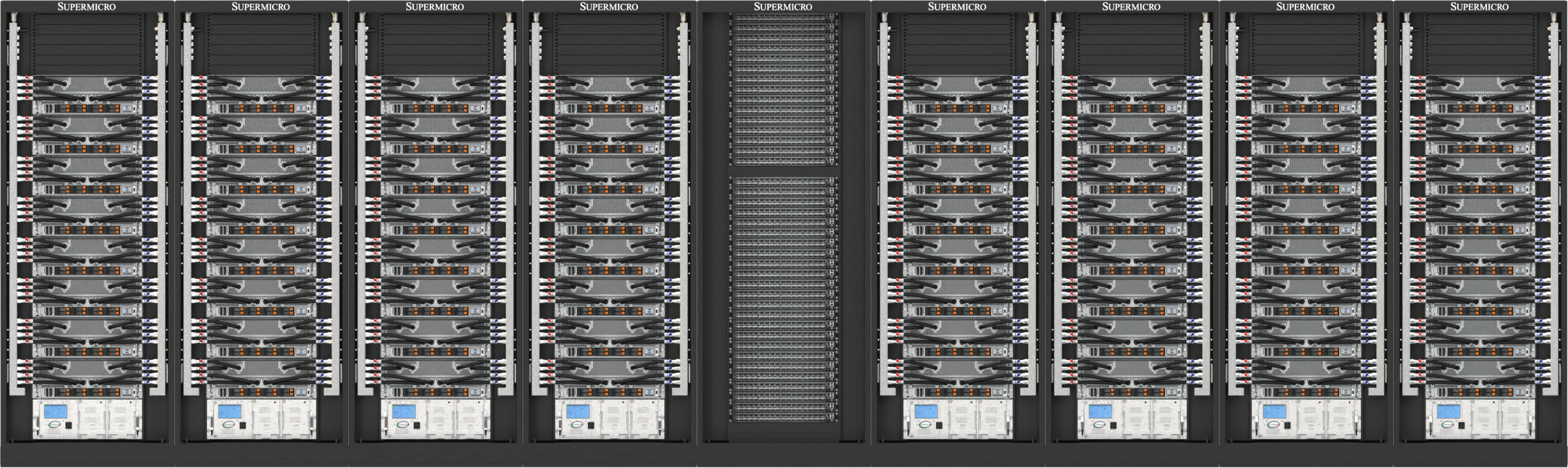

Plug-and-Play Scalable Unit for NVIDIA Blackwell

Available in 42U, 48U, or 52U rack configurations for air-cooled or liquid-cooled data centers, the new SuperCluster designs integrate NVIDIA Quantum InfiniBand or NVIDIA Spectrum™ networking in a centralized rack. Liquid-cooled SuperClusters enable a non-blocking 256-GPU scalable unit in five 42U/48U racks or an extended 768-GPU scalable unit in nine 52U racks for the most advanced AI data center deployments. Supermicro also offers an in-row CDU option for large-scale deployments, as well as a liquid-to-air cooling solution that does not require facility water. The air-cooled SuperCluster design follows the proven, industry-leading architecture of the previous generation, offering a 256-GPU scalable unit in nine 48U racks.
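The rack counts for each scalable unit can be reconstructed from figures given elsewhere on this page: 8 systems per 42U/48U liquid-cooled rack, 12 per 52U liquid-cooled rack, up to 4 air-cooled systems per rack, and 8 GPUs per system. This sketch additionally assumes exactly one centralized networking rack per scalable unit, which the text implies but does not state as a count; treat it as an illustrative check rather than the official layout.

```python
# Sketch: racks needed for a SuperCluster scalable unit.
# Systems-per-rack values come from figures elsewhere on the page.
# Assumption: exactly one centralized networking rack per scalable unit.

GPUS_PER_SYSTEM = 8
NETWORK_RACKS = 1  # assumed, one centralized InfiniBand/Ethernet rack


def racks_for_scalable_unit(total_gpus: int, systems_per_rack: int) -> int:
    """Compute racks (rounded up) plus the centralized networking rack."""
    gpus_per_rack = systems_per_rack * GPUS_PER_SYSTEM
    compute_racks = -(-total_gpus // gpus_per_rack)  # integer ceiling division
    return compute_racks + NETWORK_RACKS


print(racks_for_scalable_unit(256, 8))   # 5, liquid-cooled 42U/48U
print(racks_for_scalable_unit(768, 12))  # 9, liquid-cooled 52U
print(racks_for_scalable_unit(256, 4))   # 9, air-cooled 48U
```

Under this assumption all three configurations described above come out to the stated rack counts.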

Complete Data Center Solutions and Deployment Services for NVIDIA Blackwell

Supermicro is a global one-stop-shop total solution provider, offering data center design, liquid cooling technologies, switches, cabling, a complete data center management software suite, L11 and L12 solution validation, on-site installation, and professional support and service. With production sites in San Jose, Europe, and Asia, Supermicro offers unmatched manufacturing capacity for liquid-cooled or air-cooled rack systems, ensuring fast delivery, reduced total cost of ownership (TCO), and consistent quality.

Supermicro's NVIDIA Blackwell solutions are optimized with NVIDIA Quantum InfiniBand or NVIDIA Spectrum™ networking in a centralized rack for optimal infrastructure scaling and GPU clustering, enabling a non-blocking 256-GPU scalable unit in five racks or an extended 768-GPU scalable unit in nine racks. This architecture, with native support for NVIDIA AI Enterprise software, combines with Supermicro's expertise in deploying the world's largest liquid-cooled data centers to deliver superior efficiency and unmatched time-to-online for today's most ambitious AI data center projects.

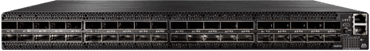

NVIDIA Quantum InfiniBand and Spectrum Ethernet

From mainstream enterprise servers to high-performance supercomputers, NVIDIA Quantum InfiniBand and Spectrum™ networking technology enables the most scalable, fastest, and most secure end-to-end networking.

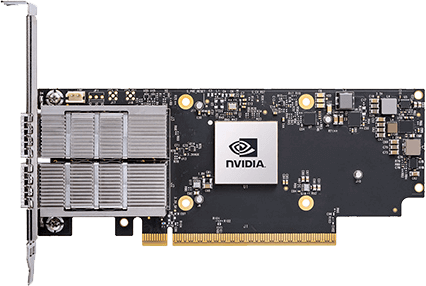

NVIDIA SuperNICs

Supermicro is at the forefront of adopting NVIDIA SuperNICs: NVIDIA ConnectX for InfiniBand and NVIDIA BlueField-3 SuperNIC for Ethernet. All Supermicro NVIDIA HGX B200 systems are equipped with a 1:1 network connection to each GPU, enabling NVIDIA GPUDirect RDMA (InfiniBand) or RoCE (Ethernet) for massively parallel AI computing.

NVIDIA AI Enterprise Software

Full access to NVIDIA application frameworks, APIs, SDKs, toolkits, and optimizers, plus the ability to deploy AI blueprints, NVIDIA NIM, RAG, and the latest optimized AI foundation models. NVIDIA AI Enterprise software streamlines the development and deployment of production-grade AI applications with enterprise-grade security, support, and stability, ensuring a smooth transition from prototype to production.