Did you know that training a single AI model can emit as much carbon as five cars over their lifetimes? 5 Tips to Reduce the Environmental Impact

Researchers at the University of Massachusetts Amherst performed a life cycle assessment of training several common large AI models. They found that the process can emit more than 626,000 pounds of carbon dioxide, roughly five times the lifetime emissions of an average American car (including the manufacturing of the car itself).
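For readers who think in metric units, the arithmetic behind that comparison can be sketched in a few lines. Only the 626,000 lb figure and the factor of five come from the text above; the per-car value is simply what those two numbers imply, not an independently sourced statistic.

```python
LB_TO_KG = 0.45359237  # exact definition of the international pound

training_lb = 626_000  # emissions figure quoted in the article
car_lifetimes = 5      # ratio quoted in the article

# Convert the training footprint to metric tons (1 t = 1000 kg).
training_tonnes = training_lb * LB_TO_KG / 1000

# Lifetime emissions per car implied by the two quoted numbers.
implied_car_lb = training_lb / car_lifetimes

print(f"Training run: about {training_tonnes:.0f} metric tons of CO2")
print(f"Implied lifetime emissions per car: about {implied_car_lb:,.0f} lb")
```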

Studies have found that the carbon footprint of AI training is significant and that it comes from the energy required to power the computers that train the models. The associated emissions can be reduced by powering data centers with renewable energy.

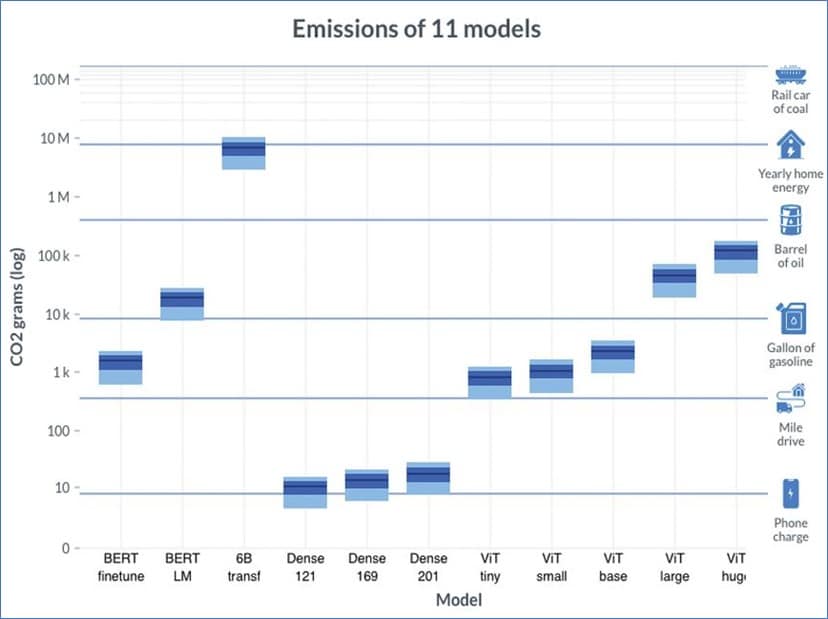

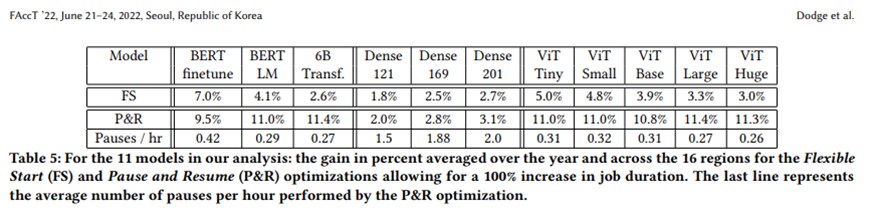

Efficient algorithms reduce carbon emissions by reducing the energy needed to train AI models. Examples include approximate algorithms, which provide good solutions to a problem without guaranteeing the best possible one, and data-driven algorithms, which are trained on data. The table shows the results of two optimization algorithms: Flexible Start, which lets shorter AI workloads begin at a flexible time, and Pause & Resume, which pauses a longer job and resumes it according to a threshold.
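To make Flexible Start concrete, here is a minimal sketch in Python. It assumes an hourly forecast of grid carbon intensity (in gCO2 per kWh) and a constant power draw. The function name `flexible_start`, its parameters, and the forecast values are all hypothetical, chosen only for illustration; this is not the implementation used in the cited study.

```python
def flexible_start(intensity, duration_h, max_delay_h, power_kw=1.0):
    """Pick the start hour (0..max_delay_h) that minimizes emissions.

    intensity   -- hourly carbon-intensity forecast, gCO2/kWh (assumed input)
    duration_h  -- uninterrupted job length in hours
    max_delay_h -- how many hours the start may be postponed
    power_kw    -- assumed constant power draw of the job
    """
    last_start = min(max_delay_h, len(intensity) - duration_h)
    if last_start < 0:
        raise ValueError("forecast is shorter than the job")

    def emissions(start):
        # Energy per hour (kWh) times intensity (g/kWh), summed over the run.
        return power_kw * sum(intensity[start:start + duration_h])

    best = min(range(last_start + 1), key=emissions)
    return best, emissions(best)


# Made-up forecast: the grid is cleanest around hours 2 to 4.
forecast = [500, 480, 300, 250, 260, 400, 520, 510]
start, grams = flexible_start(forecast, duration_h=3, max_delay_h=4)
baseline = sum(forecast[:3])  # emissions if the job starts immediately
print(f"start at hour {start}: {grams:.0f} g vs {baseline} g if started now")
```

The job itself is unchanged here. The only decision is when to launch it, which is why this approach suits short runs that fit inside a single clean period.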

Pausing an AI workload when regional emissions are high can reduce its total emissions. The savings can be significant: up to 25% for very long runs. They are lower for short runs because even a doubled duration is still relatively short, so there is less variation in emissions to exploit. The table also shows that the number of pauses per hour increases with the size of the model. This is because larger models require more computing power and therefore take longer to train.
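A threshold-based Pause & Resume policy can be sketched along these lines. This is a simplified illustration under stated assumptions, not the study's code: the job runs only in hours whose forecast intensity is at or below a threshold, but it is forced to run once there is no slack left before the deadline. The name `pause_and_resume`, the threshold, and the forecast values are hypothetical.

```python
def pause_and_resume(intensity, work_h, threshold, deadline_h, power_kw=1.0):
    """Return (schedule, emissions) for a pausable job.

    schedule[h] is True if the job runs in hour h. The job needs `work_h`
    hours of compute and must finish within `deadline_h` hours.
    """
    if work_h > deadline_h or deadline_h > len(intensity):
        raise ValueError("job cannot fit in the forecast window")

    schedule = []
    remaining = work_h
    for hour in range(deadline_h):
        hours_left = deadline_h - hour
        must_run = remaining >= hours_left  # no slack left: stop pausing
        run = remaining > 0 and (intensity[hour] <= threshold or must_run)
        schedule.append(run)
        if run:
            remaining -= 1

    emissions = power_kw * sum(c for c, run in zip(intensity, schedule) if run)
    return schedule, emissions


# Made-up hourly forecast in gCO2/kWh; the deadline is double the job length.
forecast = [500, 480, 300, 250, 260, 400, 520, 510]
schedule, grams = pause_and_resume(forecast, work_h=4, threshold=350, deadline_h=8)
baseline = sum(forecast[:4])  # run straight through from hour 0
print(schedule)
print(f"{grams:.0f} g with pausing vs {baseline} g without")
```

In this toy forecast the final hour is dirty but still has to be used, which shows why the choice of threshold and deadline matters as much as the policy itself.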

Five tips & innovative technologies to help reduce the carbon emissions of AI training

- Use energy-efficient hardware, which may include GPUs.

  - GPUs are more energy-efficient than CPUs in work per watt for certain workloads. They can train AI models faster and thus use less energy than a pure CPU environment.

  - Liquid cooling cools computing hardware more efficiently, reducing energy consumption and emissions in the data center. It can also cut noise by up to 50%, creating a more comfortable working environment. It is easier to maintain than air cooling, and liquid coolers are less likely to be damaged.

- Optimize data centers for energy efficiency. There are several ways to reduce a data center’s energy use, such as free air cooling, which lowers the power usage effectiveness (PUE).

  - More efficient cooling systems: Traditional air-cooled data centers use a significant amount of energy to cool the servers.

  - More efficient power supplies: Conventional power supplies can be inefficient, wasting up to 20% of the energy they draw. Choose Titanium- or Platinum-rated power supplies.

  - More efficient servers: Multi-node servers share resources, which lowers overall energy use per server.

- Use renewable energy to power AI training. Data centers that focus on AI training can be powered by renewable sources such as solar or wind power.

- Improve the efficiency of AI training. Innovative technologies such as quantum computing, spiking neural networks, federated learning, transfer learning, and neural architecture search can improve the efficiency of AI training and reduce energy usage.

- Use pre-trained models. Pre-trained models have already been trained on a large dataset, so they don’t have to be developed from scratch, which saves the energy that training would otherwise consume.
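Several of these tips act on the same simple chain: the energy the hardware draws, the losses in the power supply, the data center overhead captured by PUE (total facility energy divided by IT equipment energy), and the carbon intensity of the grid. The sketch below multiplies these together as a rough estimate. Every number is an illustrative assumption except the 20% power-supply loss mentioned above, and the function name `training_emissions_kg` is hypothetical.

```python
def training_emissions_kg(component_kwh, psu_efficiency, pue, grid_g_per_kwh):
    """Rough estimate of training emissions in kg CO2.

    component_kwh   -- energy delivered to the chips (after the power supply)
    psu_efficiency  -- fraction of wall energy the power supply delivers
    pue             -- total facility energy / IT equipment energy
    grid_g_per_kwh  -- carbon intensity of the electricity, gCO2/kWh
    """
    wall_kwh = component_kwh / psu_efficiency  # energy the server draws
    facility_kwh = wall_kwh * pue              # add cooling and other overhead
    return facility_kwh * grid_g_per_kwh / 1000


# Hypothetical job: 8 accelerators at 0.3 kW each for 100 hours.
component_kwh = 8 * 0.3 * 100

# Conventional setup: 20% power-supply loss, PUE 1.6, 400 gCO2/kWh (assumed).
baseline = training_emissions_kg(component_kwh, 0.80, 1.6, 400)

# Improved setup: efficient supply, better cooling, mostly renewable power
# (all three values are assumptions chosen for illustration).
improved = training_emissions_kg(component_kwh, 0.94, 1.2, 50)

print(f"baseline: {baseline:.1f} kg CO2, improved: {improved:.1f} kg CO2")
```

Because the factors multiply, a modest gain at each stage compounds, and the grid's carbon intensity has by far the largest effect in this example.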

Follow these tips to make AI more sustainable.

Sources:

Measuring AI’s Carbon Footprint - IEEE Spectrum

Energy-Efficient Computing Archives - Cambridge Open Zettascale Lab

Measuring the Carbon Intensity of AI in Cloud Instances (facctconference.org)