Your NVIDIA Blackwell Journey Starts Here

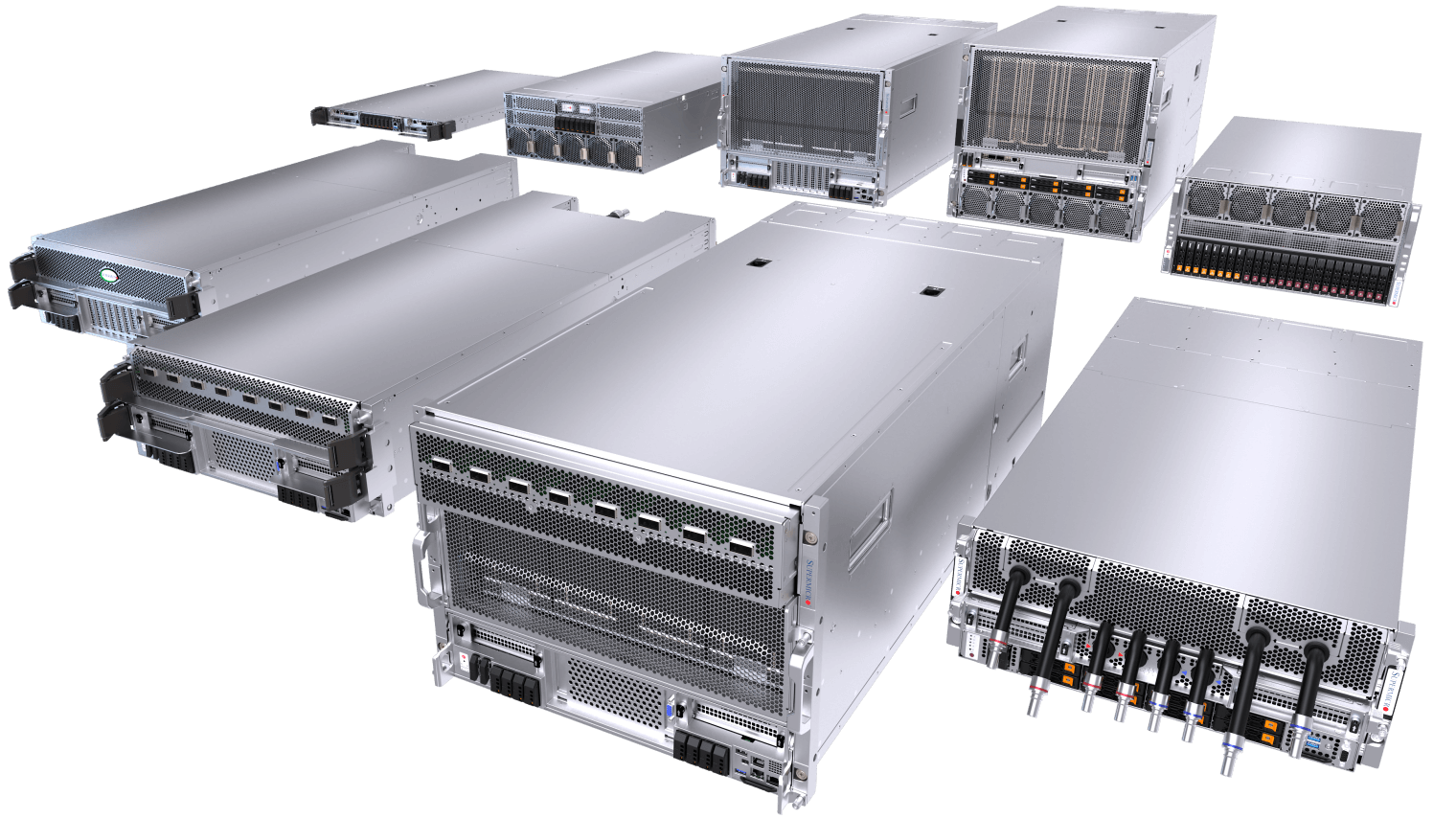

In this transformative moment for AI, where evolving scaling laws continue to push the limits of data center capabilities, our latest NVIDIA Blackwell-powered solutions, developed through close collaboration with NVIDIA, offer unprecedented computational performance, density, and efficiency with next-generation air-cooled and liquid-cooled architectures. With our readily deployable AI Data Center Building Block solutions, Supermicro is your premier partner to start your NVIDIA Blackwell journey, providing sustainable, cutting-edge solutions that accelerate AI innovation.

End-to-End AI Data Center Building Block Solutions Advantage

A broad range of air-cooled and liquid-cooled systems with multiple CPU options, a full data center management software suite, turnkey rack-level integration with full networking, cabling, and cluster-level L12 validation, plus global delivery, support, and service.

- Vast Experience

  - Supermicro’s AI Data Center Building Block Solutions power the largest liquid-cooled AI data center deployment in the world.

- Flexible Offerings

  - Air-cooled or liquid-cooled, GPU-optimized systems in multiple system and rack form factors, with a choice of CPU, storage, and networking options. Optimizable for your needs.

- Liquid-Cooling Pioneer

  - Proven, scalable, plug-and-play liquid-cooling solutions to sustain the AI revolution. Designed specifically for the NVIDIA Blackwell architecture.

- Fast Time to Online

  - Accelerated delivery with global capacity, world-class deployment expertise, and onsite services to bring your AI to production, fast.

Ultra Performance for AI Reasoning

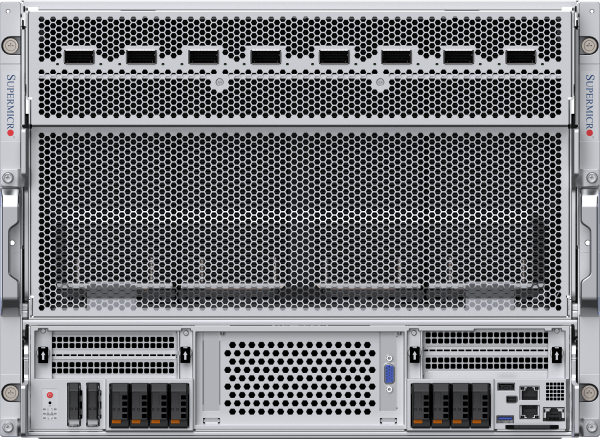

The Most Advanced Air-cooled and Liquid-cooled Architecture for NVIDIA HGX™ B300

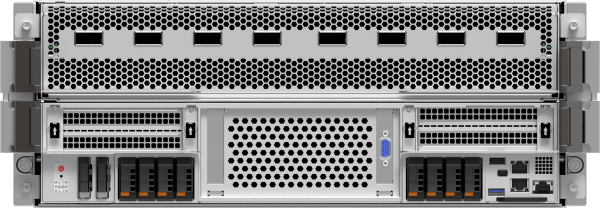

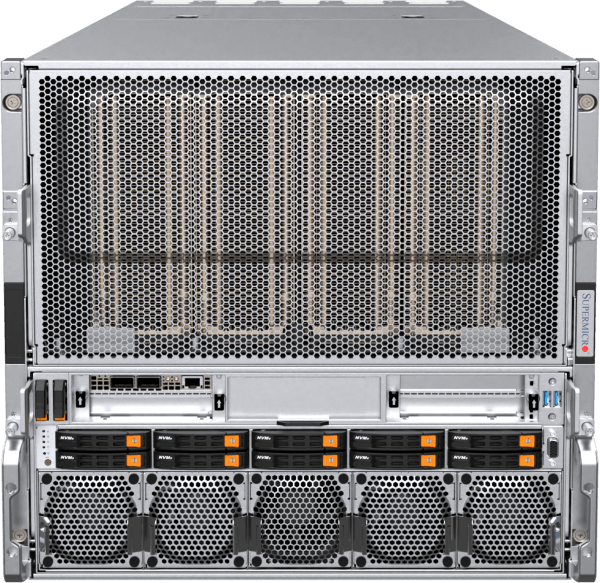

The Supermicro NVIDIA HGX platform powers many of the world's largest AI clusters, delivering computational output for today's transformative AI applications. Now featuring NVIDIA Blackwell Ultra, the 8U air-cooled system maximizes the performance of eight 1100W HGX B300 GPUs with 2.3TB of total HBM3e memory. Eight front OSFP ports with integrated ConnectX®-8 SuperNICs at 800 Gb/s enable turnkey deployment of NVIDIA Quantum-X800 InfiniBand or Spectrum-X™ Ethernet clusters. The 4U liquid-cooled system features DLC-2 technology with 98% heat capture, achieving up to 40% data center power savings. Supermicro Data Center Building Block Solutions® (DCBBS) and onsite deployment expertise provide complete solutions for liquid cooling, network topology and cabling, power delivery, and thermal management to accelerate AI factory time-to-online.
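As a rough sanity check, the per-system figures quoted above can be reproduced with simple arithmetic. The sketch below is illustrative only: the constant and function names are hypothetical, and it assumes decimal units (1 TB = 1000 GB, 1 Tb/s = 1000 Gb/s) and an even split of the 2.3TB HBM3e total across the eight GPUs.

```python
# Back-of-the-envelope totals for one HGX B300 8-GPU system.
# Names are illustrative; the input figures come from the paragraph above.

GPUS_PER_SYSTEM = 8
TOTAL_HBM3E_TB = 2.3      # total HBM3e per system, as quoted
OSFP_PORTS = 8            # front OSFP ports per system
PORT_SPEED_GBPS = 800     # Gb/s per port, as quoted


def hbm_per_gpu_gb(total_tb: float, gpus: int) -> float:
    """Average HBM3e per GPU in GB, assuming an even split and 1 TB = 1000 GB."""
    return total_tb * 1000 / gpus


def aggregate_network_tbps(ports: int, port_gbps: int) -> float:
    """Sum of the line rates of all ports, in Tb/s (1 Tb/s = 1000 Gb/s)."""
    return ports * port_gbps / 1000


if __name__ == "__main__":
    per_gpu = hbm_per_gpu_gb(TOTAL_HBM3E_TB, GPUS_PER_SYSTEM)
    network = aggregate_network_tbps(OSFP_PORTS, PORT_SPEED_GBPS)
    print(f"HBM3e per GPU (average): {per_gpu:.1f} GB")
    print(f"Aggregate network line rate: {network:.1f} Tb/s")
```

These are nominal line-rate and capacity sums, not measured throughput or usable memory.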

8U Air-cooled or 4U Liquid-cooled System

for NVIDIA HGX B300 8-GPU

Front I/O air-cooled system for NVIDIA Blackwell Ultra HGX B300 8-GPU with integrated NVIDIA ConnectX-8 networking

(Coming Soon) Front I/O liquid-cooled system with integrated NVIDIA ConnectX-8 800 Gb/s networking

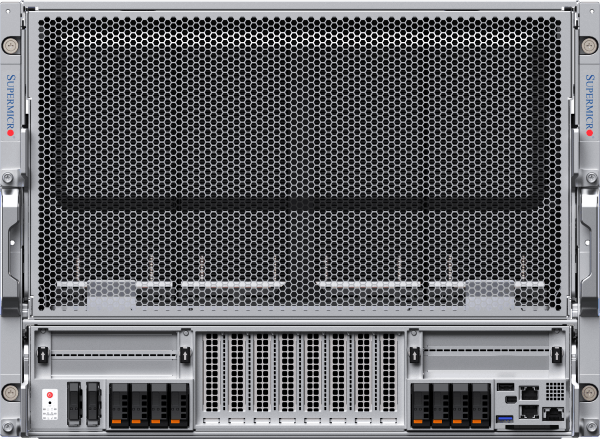

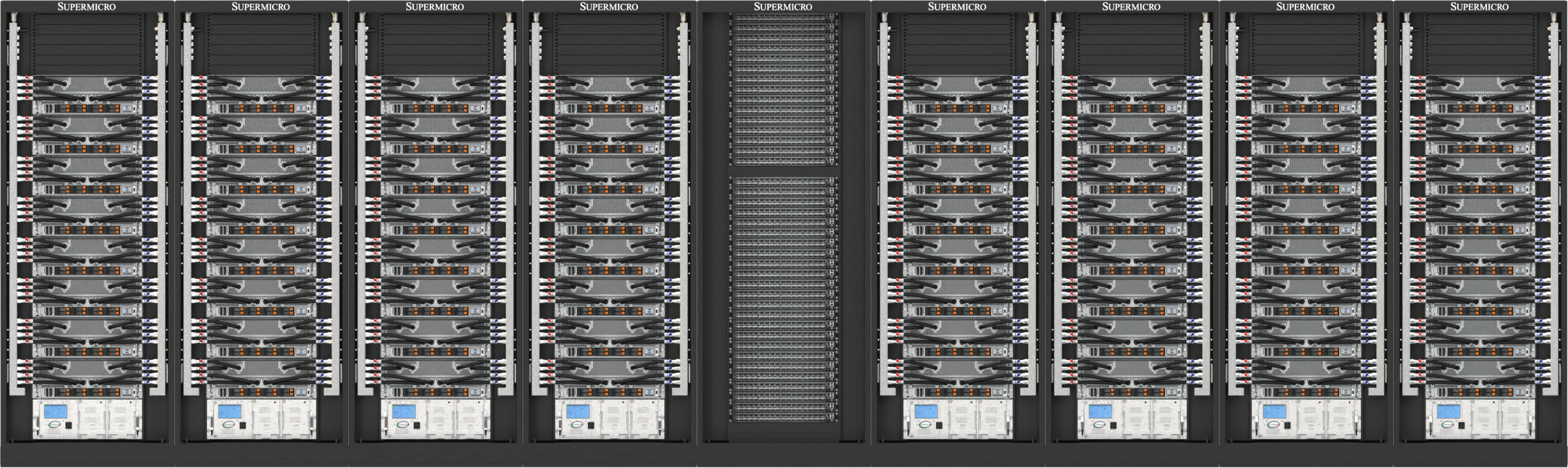

An Exascale of Compute in a Rack

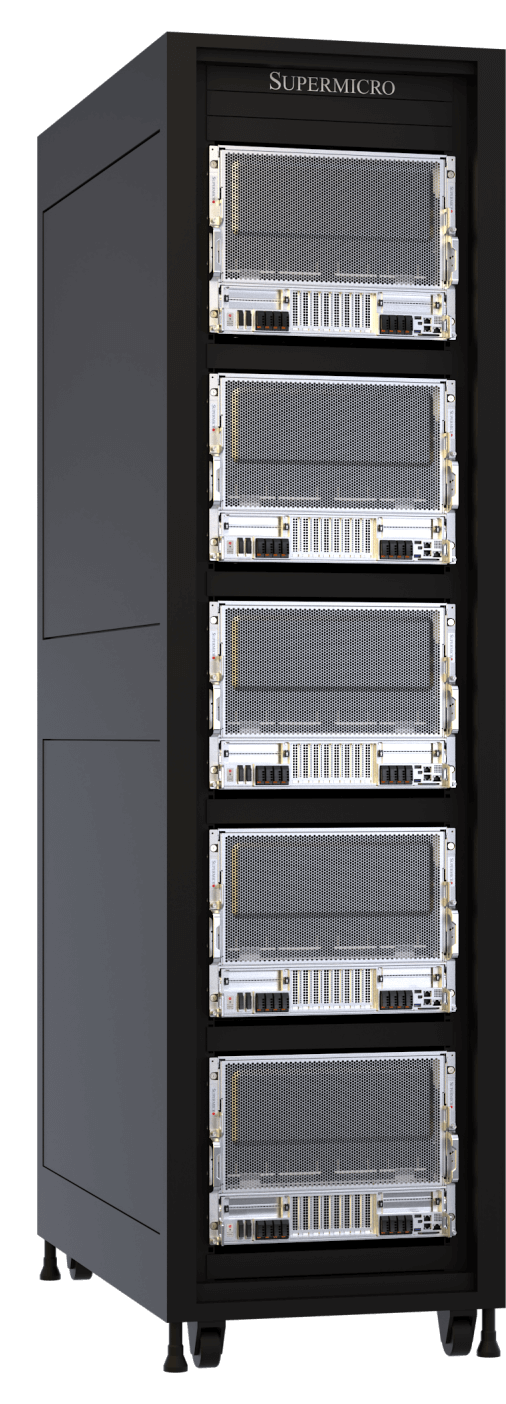

End-to-end Liquid-cooling Solution for NVIDIA GB300 NVL72

The Supermicro NVIDIA GB300 NVL72 tackles AI computational demands from training foundation models to large-scale reasoning inference. It combines high AI performance with Supermicro's direct liquid cooling technology, enabling maximum computing density and efficiency. Based on NVIDIA Blackwell Ultra, a single rack integrates 72 NVIDIA B300 GPUs with 288GB of HBM3e memory each. With 1.8TB/s NVLink interconnects, the GB300 NVL72 operates as an exascale supercomputer in a single node. Upgraded networking doubles performance across the compute fabric, supporting 800 Gb/s speeds. Supermicro's manufacturing capacity and end-to-end services accelerate liquid-cooled AI factory deployment and speed time-to-market for GB300 NVL72 clusters.
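The rack-level totals implied by these figures can be worked out directly. The following is a minimal sketch with hypothetical names. It assumes decimal units (1 TB = 1000 GB) and, as an assumption not stated in the text, that the 1.8TB/s NVLink figure applies per GPU.

```python
# Rack-level aggregates for a GB300 NVL72, using figures quoted above.
# Assumption: 1.8 TB/s NVLink bandwidth is a per-GPU figure.

GPUS_PER_RACK = 72
HBM3E_PER_GPU_GB = 288
NVLINK_PER_GPU_TBPS = 1.8   # TB/s, assumed per GPU


def rack_hbm_tb(gpus: int, gb_per_gpu: int) -> float:
    """Total HBM3e in the rack, in TB (1 TB = 1000 GB)."""
    return gpus * gb_per_gpu / 1000


def rack_nvlink_tbps(gpus: int, per_gpu_tbps: float) -> float:
    """Sum of per-GPU NVLink bandwidth across the NVLink domain, in TB/s."""
    return gpus * per_gpu_tbps


if __name__ == "__main__":
    print(f"Total HBM3e per rack: {rack_hbm_tb(GPUS_PER_RACK, HBM3E_PER_GPU_GB):.1f} TB")
    print(f"Summed NVLink bandwidth: {rack_nvlink_tbps(GPUS_PER_RACK, NVLINK_PER_GPU_TBPS):.1f} TB/s")
```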

NVIDIA GB300 NVL72 and GB200 NVL72

for NVIDIA GB300/GB200 Grace™ Blackwell Superchip

72 NVIDIA Blackwell Ultra GPUs in one NVIDIA NVLink domain. Now with Ultra performance and scalability.

72 NVIDIA Blackwell GPUs in one NVIDIA NVLink domain. The apex of AI computing architecture.

Air-Cooled System, Evolved

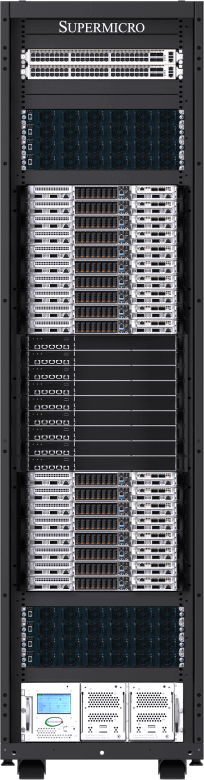

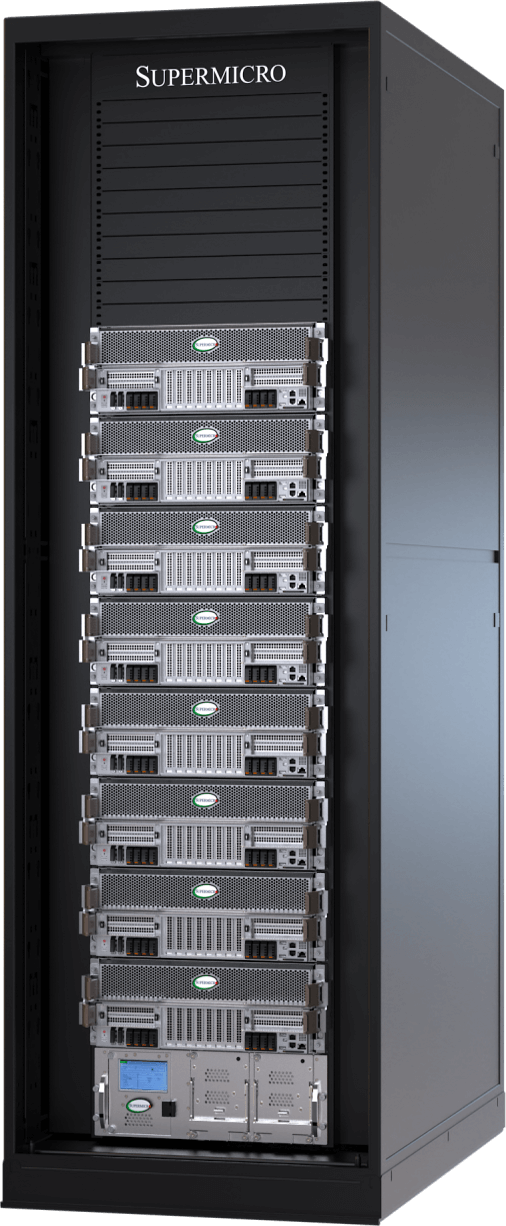

The Best-Selling Air-cooled System, Redesigned and Optimized for NVIDIA HGX B200 8-GPU

The new air-cooled NVIDIA HGX B200 8-GPU systems feature an enhanced cooling architecture, high configurability for CPU, memory, storage, and networking, and improved serviceability from the front or rear side. Up to four of the new 8U/10U air-cooled systems can be installed and fully integrated in a rack, achieving the same density as the previous generation while providing up to 15x the inference performance and 3x the training performance. All Supermicro NVIDIA HGX B200 systems are equipped with a 1:1 GPU-to-NIC ratio supporting NVIDIA BlueField®-3 or NVIDIA ConnectX®-7 for scaling across a high-performance computing fabric.

8U Front I/O or 10U Rear I/O Air-cooled System

for NVIDIA HGX B200 8-GPU

Front I/O air-cooled system with enhanced system memory configuration flexibility and cold aisle serviceability

Rear I/O air-cooled design for large language model training and high volume inference

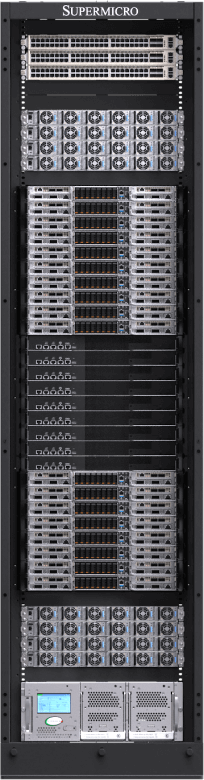

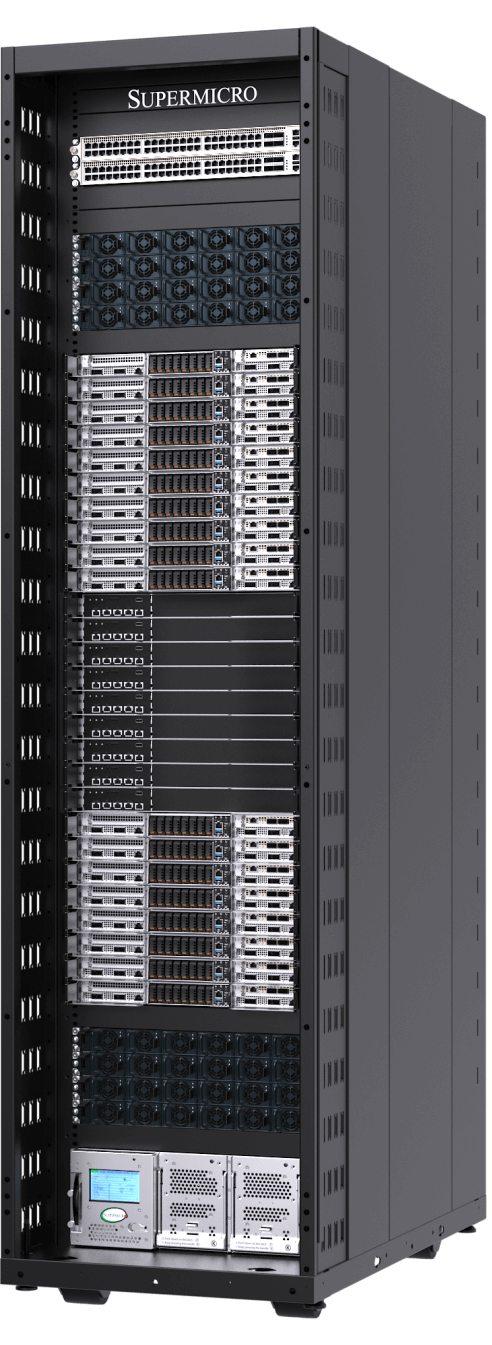

Next-Gen Liquid-Cooled System

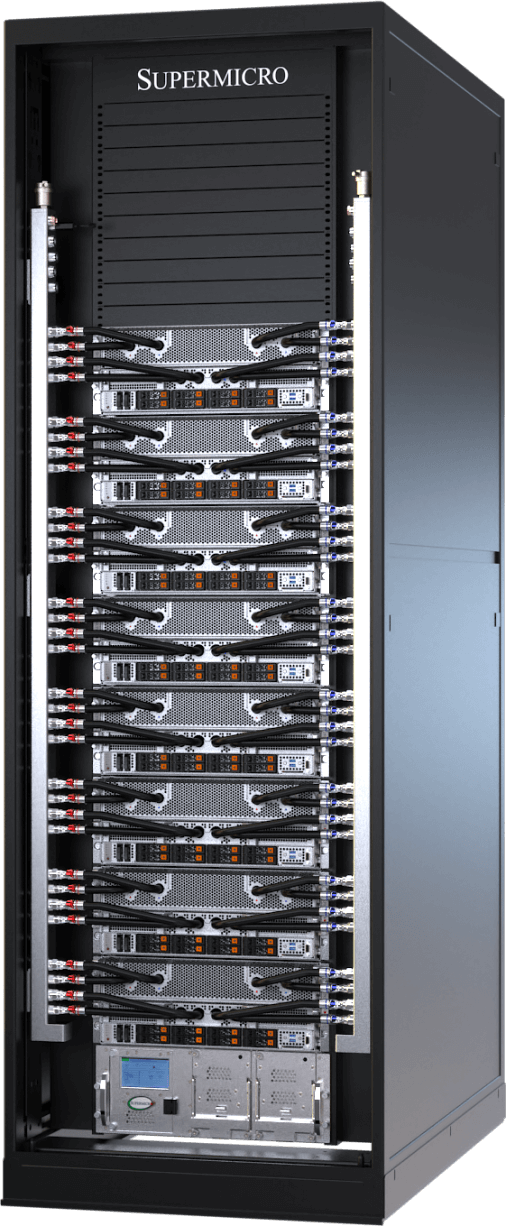

Up to 96 NVIDIA HGX™ B200 GPUs in a Single Rack with Maximum Scalability and Efficiency

The new front-I/O liquid-cooled 4U NVIDIA HGX B200 8-GPU system features Supermicro's DLC-2 technology. Direct liquid cooling now captures up to 92% of the heat generated by server components such as the CPU, GPU, PCIe switch, DIMM, VRM, and PSU, allowing up to 40% data center power savings and noise levels as low as 50dB. The new architecture improves on the efficiency and serviceability of its predecessor, which was designed for NVIDIA HGX H100/H200 8-GPU systems. The rack-scale design is available in 42U, 48U, or 52U configurations, and its new vertical coolant distribution manifolds (CDM) mean that horizontal manifolds no longer occupy valuable rack units. This enables 8 systems with 64 NVIDIA Blackwell GPUs in a 42U rack and up to 12 systems with 96 NVIDIA Blackwell GPUs in a 52U rack.
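The rack density figures above follow from the 4U system height and eight GPUs per system. A minimal sketch of that arithmetic, using a hypothetical `rack_summary` helper, is shown below. What occupies the remaining rack units is not stated here, so the sketch only reports how many are left over.

```python
# GPU density per rack for 4U liquid-cooled HGX B200 8-GPU systems.
# Names are illustrative; figures come from the paragraph above.

GPUS_PER_SYSTEM = 8
SYSTEM_HEIGHT_U = 4


def rack_summary(rack_height_u: int, systems: int) -> dict:
    """Return GPU count and rack-unit usage for a given number of systems."""
    compute_u = systems * SYSTEM_HEIGHT_U
    if compute_u > rack_height_u:
        raise ValueError(f"{systems} systems need {compute_u}U, rack has {rack_height_u}U")
    return {
        "gpus": systems * GPUS_PER_SYSTEM,
        "compute_u": compute_u,
        "remaining_u": rack_height_u - compute_u,
    }


if __name__ == "__main__":
    for height, systems in [(42, 8), (52, 12)]:
        print(f"{height}U rack, {systems} systems: {rack_summary(height, systems)}")
```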

4U Front I/O or Rear I/O Liquid-cooled System

for NVIDIA HGX B200 8-GPU

Front I/O DLC-2 liquid-cooled system with up to 40% data center power savings and noise levels as low as 50dB

Rear I/O liquid-cooled system designed for maximum compute density and performance

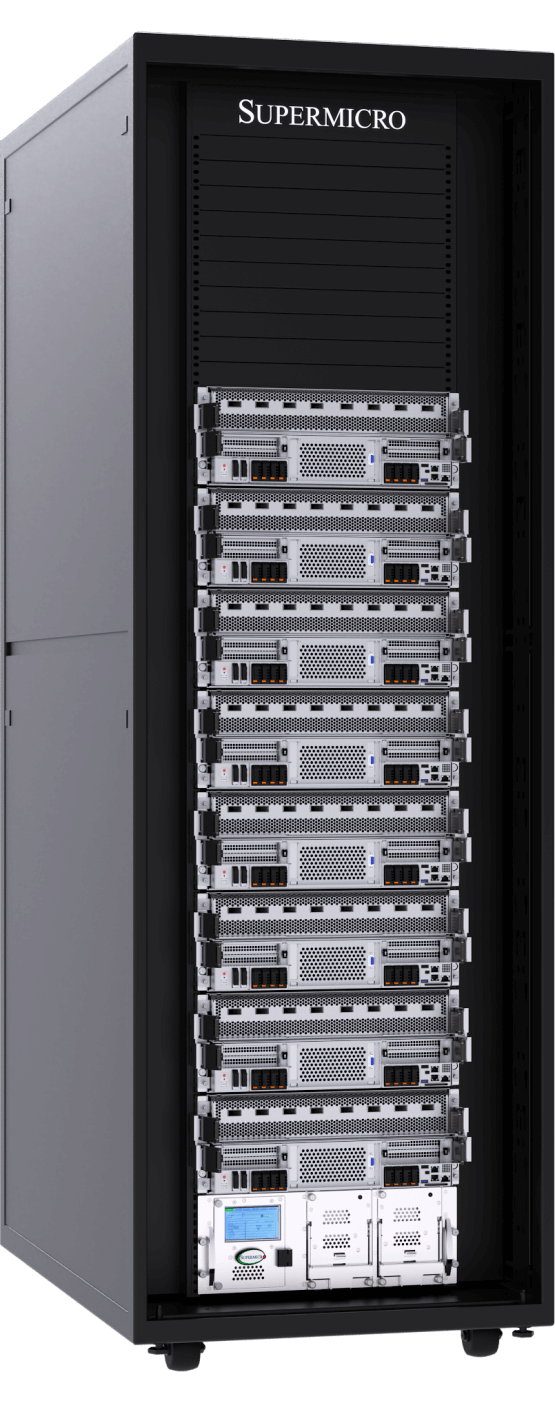

Plug-and-Play Scalable Unit Readily Deployable for NVIDIA Blackwell

Available in 42U, 48U, or 52U rack configurations for air-cooled or liquid-cooled data centers, the new SuperCluster designs incorporate NVIDIA Quantum InfiniBand or NVIDIA Spectrum™ networking in a centralized rack. The liquid-cooled SuperClusters enable a non-blocking, 256-GPU scalable unit in five 42U/48U racks or an extended 768-GPU scalable unit in nine 52U racks for the most advanced AI data center deployments. Supermicro also offers an in-row CDU option for large deployments, as well as a liquid-to-air cooling rack solution that doesn't require facility water. The air-cooled SuperCluster design follows the proven, industry-leading architecture of the previous generation, providing a 256-GPU scalable unit in nine 48U racks.
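The scalable unit sizes can be cross-checked against the per-rack densities with a short calculation. The sketch below is an illustration under stated assumptions, not a published layout: it assumes exactly one rack per scalable unit is the centralized networking rack, that the remaining racks hold equal numbers of 8-GPU systems, and the `ScalableUnit` class is a hypothetical name.

```python
# Cross-check of scalable unit sizes against per-rack system counts.
# Assumption: one centralized networking rack per unit; the rest are compute racks.
from dataclasses import dataclass

GPUS_PER_SYSTEM = 8


@dataclass(frozen=True)
class ScalableUnit:
    name: str
    total_gpus: int
    total_racks: int
    network_racks: int = 1  # assumed, not stated as a count in the text

    @property
    def compute_racks(self) -> int:
        return self.total_racks - self.network_racks

    @property
    def gpus_per_compute_rack(self) -> int:
        gpus, remainder = divmod(self.total_gpus, self.compute_racks)
        if remainder:
            raise ValueError(f"{self.name}: GPUs do not divide evenly across racks")
        return gpus

    @property
    def systems_per_compute_rack(self) -> int:
        return self.gpus_per_compute_rack // GPUS_PER_SYSTEM


UNITS = [
    ScalableUnit("liquid-cooled, five racks", total_gpus=256, total_racks=5),
    ScalableUnit("liquid-cooled, nine 52U racks", total_gpus=768, total_racks=9),
    ScalableUnit("air-cooled, nine 48U racks", total_gpus=256, total_racks=9),
]

if __name__ == "__main__":
    for unit in UNITS:
        print(
            f"{unit.name}: {unit.compute_racks} compute racks, "
            f"{unit.gpus_per_compute_rack} GPUs and "
            f"{unit.systems_per_compute_rack} systems per compute rack"
        )
```

Under these assumptions the results come out to 8, 12, and 4 systems per compute rack, which matches the per-rack system counts quoted elsewhere in this document for the liquid-cooled and air-cooled HGX B200 systems.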

End-to-end Data Center Building Block Solution and Deployment Services for NVIDIA Blackwell

Supermicro serves as a comprehensive one-stop solution provider with global manufacturing scale, delivering data center-level solution design, liquid-cooling technologies, switching, cabling, a full data center management software suite, L11 and L12 solution validation, onsite installation, and professional support and service. With production facilities across San Jose, Europe, and Asia, Supermicro offers unmatched manufacturing capacity for liquid-cooled or air-cooled rack systems, ensuring timely delivery, reduced total cost of ownership (TCO), and consistent quality.

Supermicro’s NVIDIA Blackwell solutions are optimized with NVIDIA Quantum InfiniBand or NVIDIA Spectrum™ networking in a centralized rack for the best infrastructure scaling and GPU clustering, enabling a non-blocking, 256-GPU scalable unit in five racks or an extended 768-GPU scalable unit in nine racks. This architecture, with native support for NVIDIA Enterprise software, combines with Supermicro’s expertise in deploying the world’s largest liquid-cooled data centers to deliver superior efficiency and unmatched time-to-online for today's most ambitious AI data center projects.

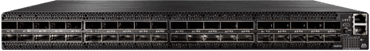

NVIDIA Quantum InfiniBand and Spectrum Ethernet

From mainstream enterprise servers to high-performance supercomputers, NVIDIA Quantum InfiniBand and Spectrum™ networking technologies deliver the most scalable, fastest, and most secure end-to-end networking.

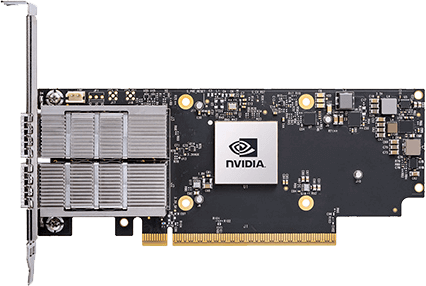

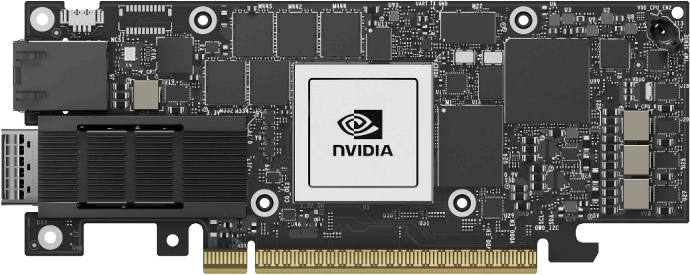

NVIDIA SuperNICs

Supermicro is at the forefront of adopting NVIDIA SuperNICs: NVIDIA ConnectX for InfiniBand and NVIDIA BlueField-3 SuperNIC for Ethernet. All of Supermicro’s NVIDIA HGX B200 systems are equipped with 1:1 networking to each GPU to enable NVIDIA GPUDirect RDMA (InfiniBand) or RoCE (Ethernet) for massively parallel AI computing.

NVIDIA AI Enterprise Software

NVIDIA AI Enterprise Software provides full access to NVIDIA application frameworks, APIs, SDKs, toolkits, and optimizers, along with the ability to deploy AI blueprints, NVIDIA NIMs, RAGs, and the latest optimized AI foundation models. It streamlines development and deployment of production-grade AI applications with enterprise-grade security, support, and stability to ensure a smooth transition from prototype to production.