Instinct™ MI350 Series/MI325X

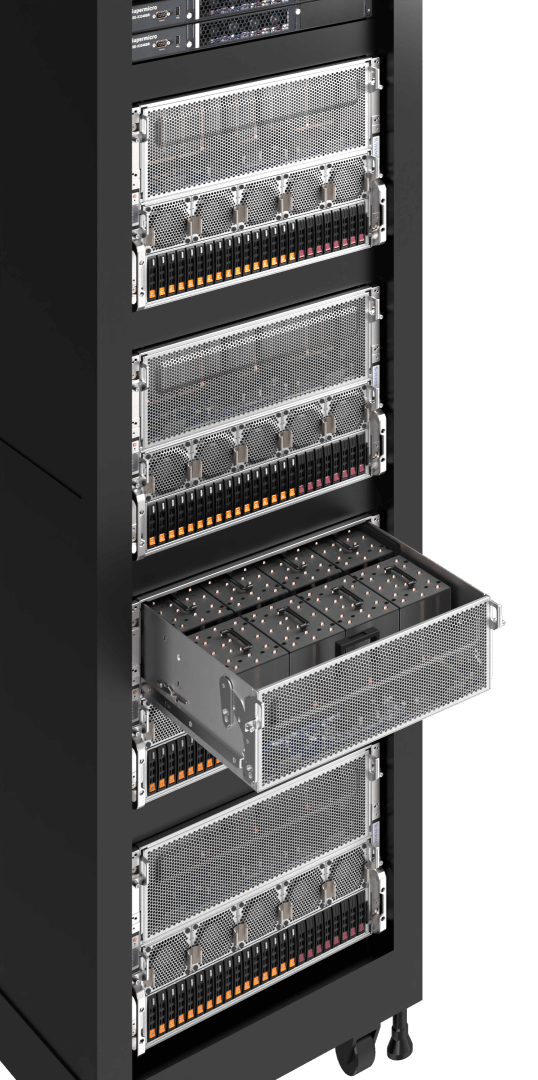

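Supermicro delivers powerful performance for large-scale infrastructure with systems featuring 5th Gen AMD processors and AMD MI350 Series GPUs. These servers are built on our proven AI system architecture. Based on the industry-standard Universal Baseboard (UBB 2.0) architecture and equipped with AMD MI350 Series accelerators and more than 2 TB of HBM3e memory, they efficiently handle the most demanding AI workloads.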

Resources

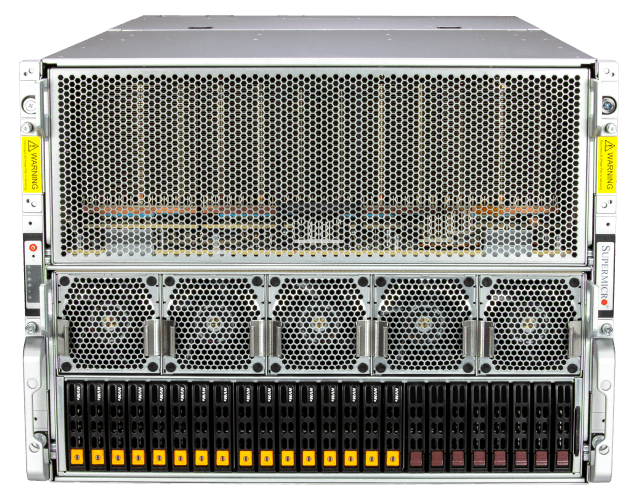

MI325X Air-Cooled and Liquid-Cooled Systems

- MI355X

風冷式 - MI350X,

MI325X,

風冷式 - MI355X、

MI325X

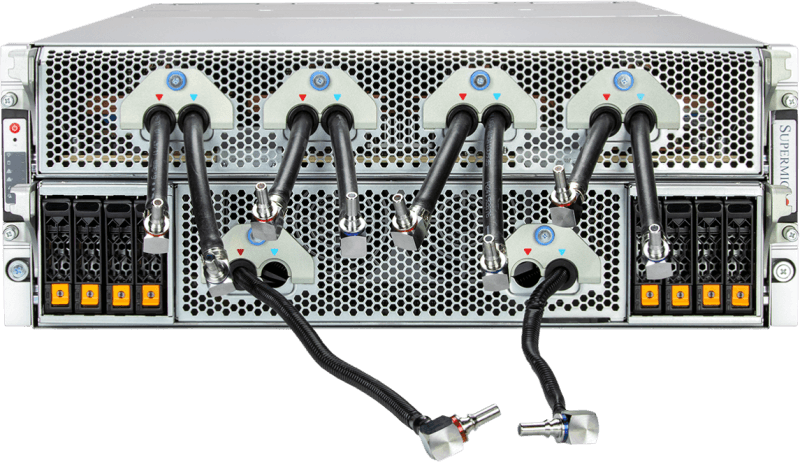

液冷式

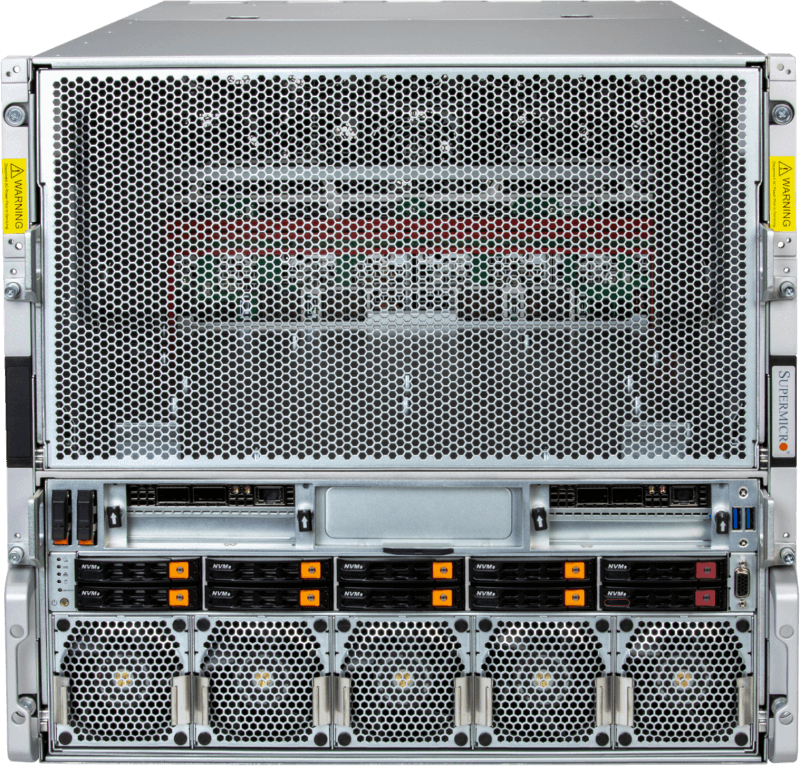

DP AMD system with AMD 8-GPU

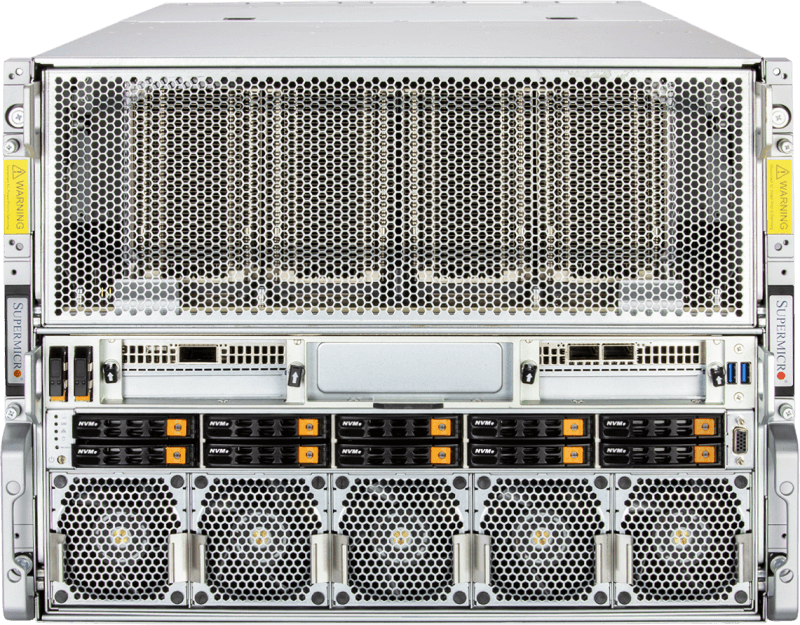

DP AMD system with AMD 8-GPU

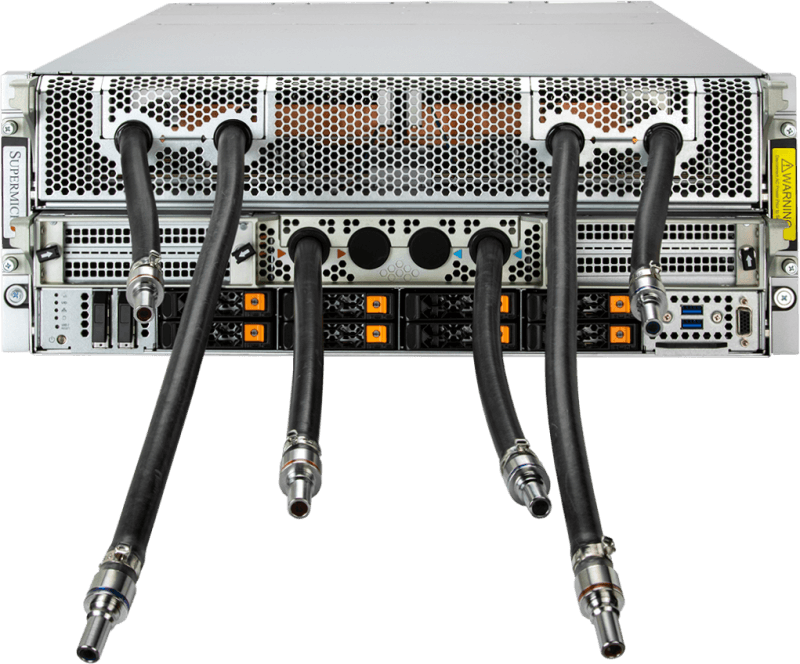

DP AMD system with AMD 8-GPU and liquid cooling

Instinct™ MI300X/A

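The MI300X 8-GPU system features AMD Infinity Fabric™ Link technology, delivering up to 896 GB/s of peak theoretical peer-to-peer I/O bandwidth on an open-standard platform. A single server node carries an industry-leading 1.5 TB of HBM3 GPU memory and natively supports sparse matrix operations. The design is intended to save power, reduce compute cycles, and lower memory use for AI workloads.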
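As a rough check on the headline figures above, the node totals can be derived from per-device numbers. The per-GPU values in this sketch (192 GB of HBM3 per MI300X, seven Infinity Fabric links per GPU at 128 GB/s each) are assumptions drawn from AMD's published MI300X specifications, not from this page, and the function names are illustrative only.

```python
# Assumed per-device figures (not stated on this page): 192 GB of HBM3 per
# MI300X, and seven Infinity Fabric links per GPU at 128 GB/s each.
GPUS_PER_NODE = 8
HBM3_PER_GPU_GB = 192
LINKS_PER_GPU = 7
LINK_BANDWIDTH_GB_PER_S = 128


def node_memory_gb(gpus=GPUS_PER_NODE, per_gpu_gb=HBM3_PER_GPU_GB):
    """Total HBM3 capacity across all GPUs in one server node, in GB."""
    return gpus * per_gpu_gb


def peer_bandwidth_gb_per_s(links=LINKS_PER_GPU,
                            per_link=LINK_BANDWIDTH_GB_PER_S):
    """Peak theoretical peer-to-peer I/O bandwidth of one GPU, in GB/s."""
    return links * per_link


if __name__ == "__main__":
    total_gb = node_memory_gb()
    print(f"HBM3 per node: {total_gb} GB (about {total_gb / 1000:.1f} TB)")
    print(f"Peer-to-peer bandwidth: {peer_bandwidth_gb_per_s()} GB/s")
```

Under these assumptions the totals come to 1,536 GB (about 1.5 TB) of HBM3 per node and 896 GB/s of peer-to-peer bandwidth, matching the figures quoted above.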

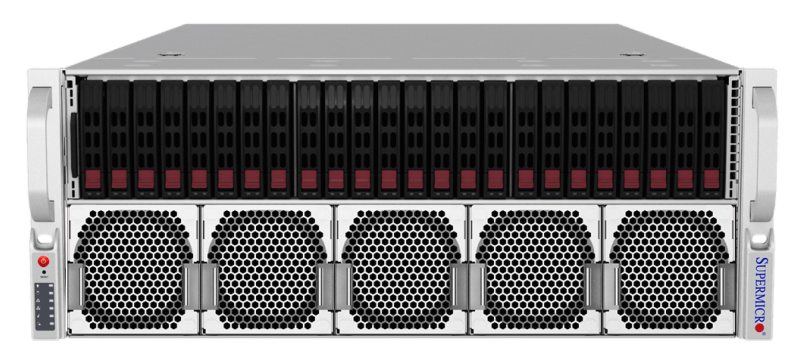

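Data center-class multiprocessor systems for HPC and AI. Supermicro's air-cooled 4U and liquid-cooled 2U quad-APU systems support AMD MI300A. The solution combines CPU and GPU performance with Supermicro's expertise in multiprocessor system architecture and thermal design, and is finely tuned for the convergence of AI and HPC.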

Resources

MI300X/MI300A Air-Cooled and Liquid-Cooled Systems

- MI300X, air-cooled
- MI300X, liquid-cooled
- MI300A, air-cooled
- MI300A, liquid-cooled

DP 8U 4-GPU OAM architecture with CPU interconnect technology

DP 4U 4-GPU OAM architecture with liquid cooling

DP 4U 4-APU architecture with AMD Infinity Fabric™ Link technology

DP 2U 4-APU architecture with liquid cooling

Resources