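Unleash the full potential of AI with Supermicro's cutting-edge, AI-ready infrastructure solutions. From large-scale training to intelligent edge inference, our turnkey reference designs simplify and accelerate AI deployment. Give your workloads optimal performance and scalability while optimizing costs and reducing environmental impact. Explore Supermicro's diverse range of AI workload-optimized solutions and accelerate every aspect of your business.

Large-Scale AI Training and Inference

Large Language Models, Generative AI Training, Autonomous Driving, Robotics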

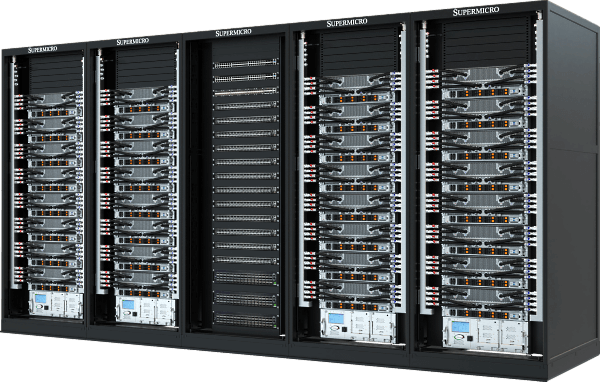

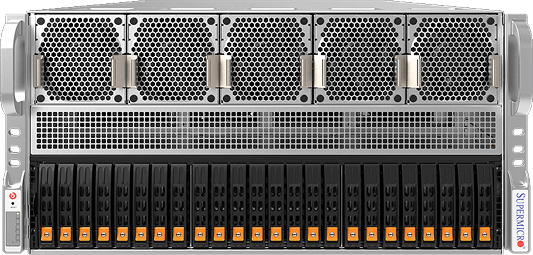

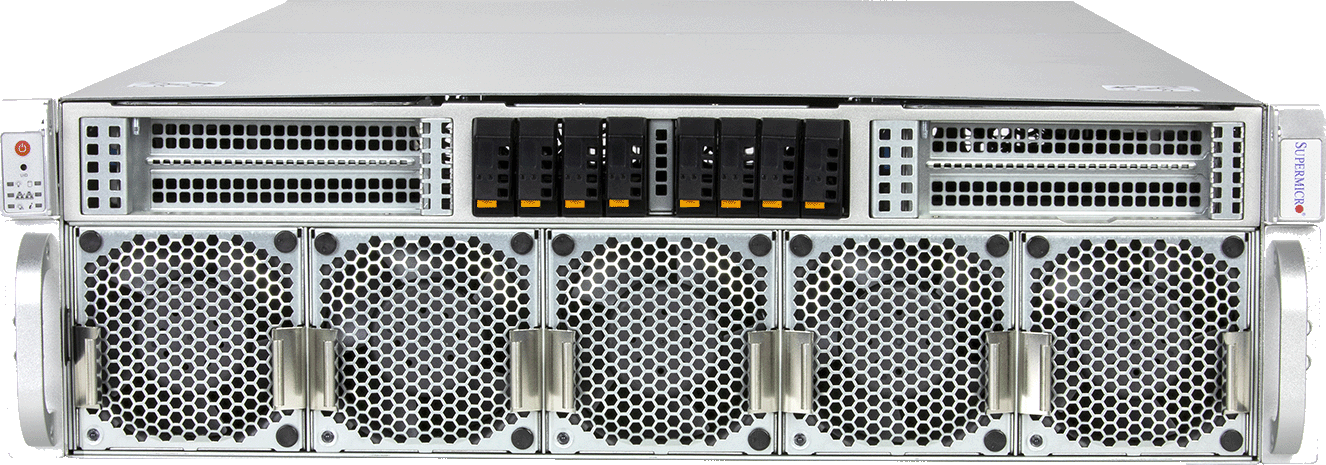

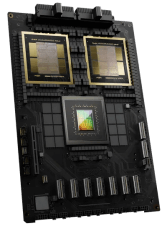

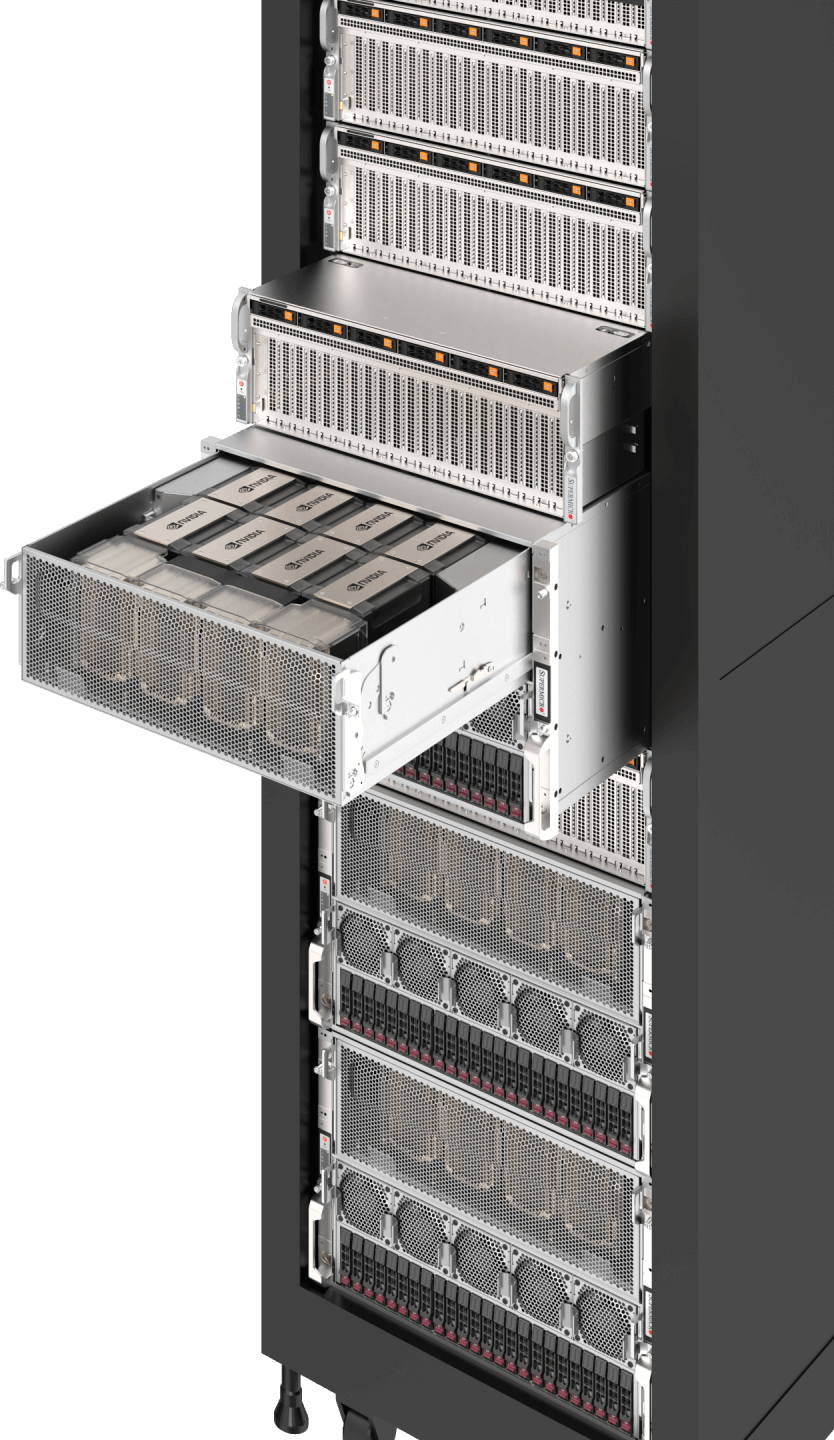

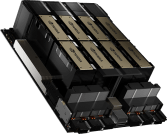

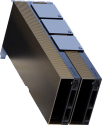

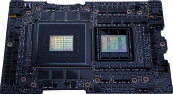

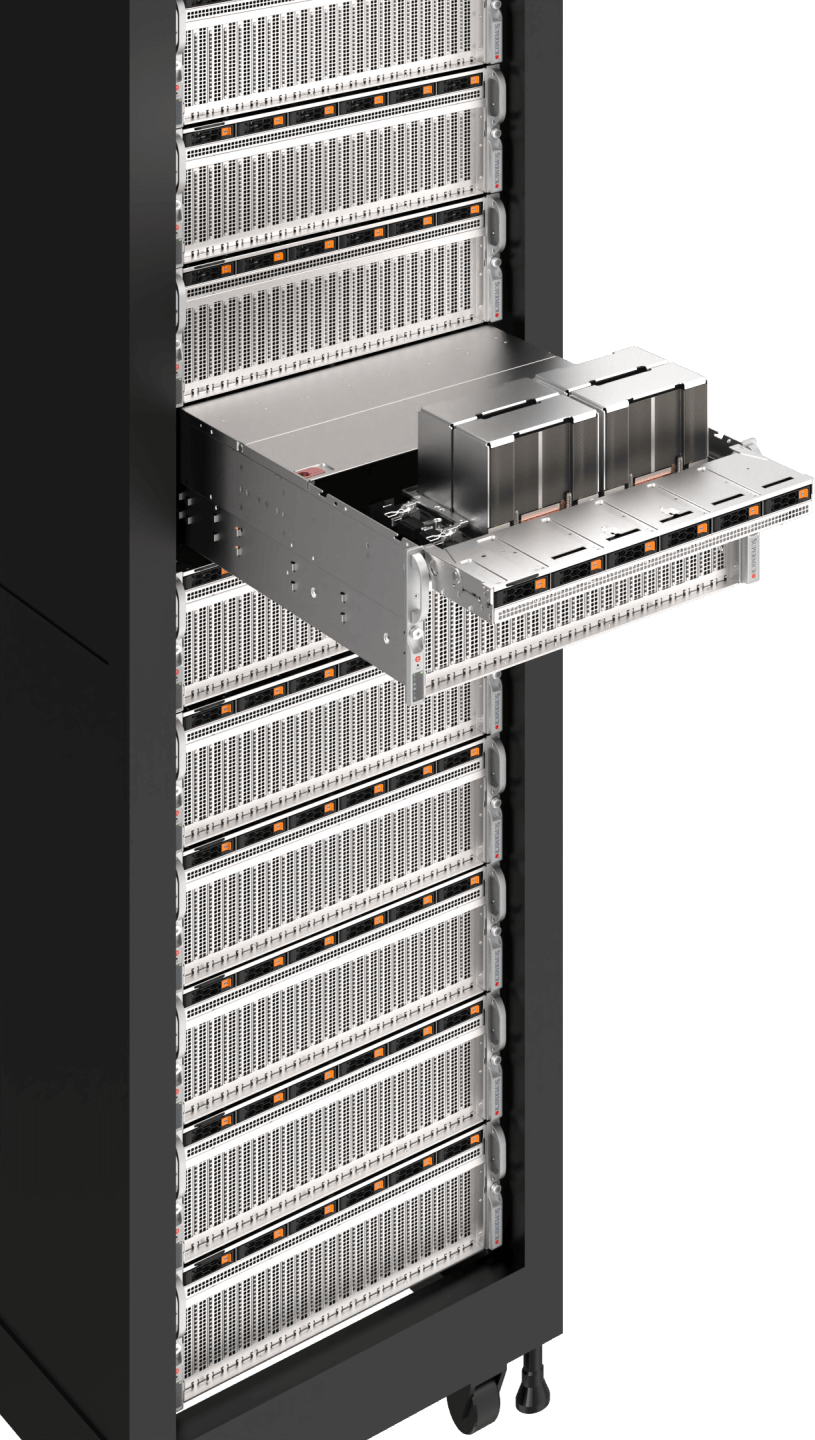

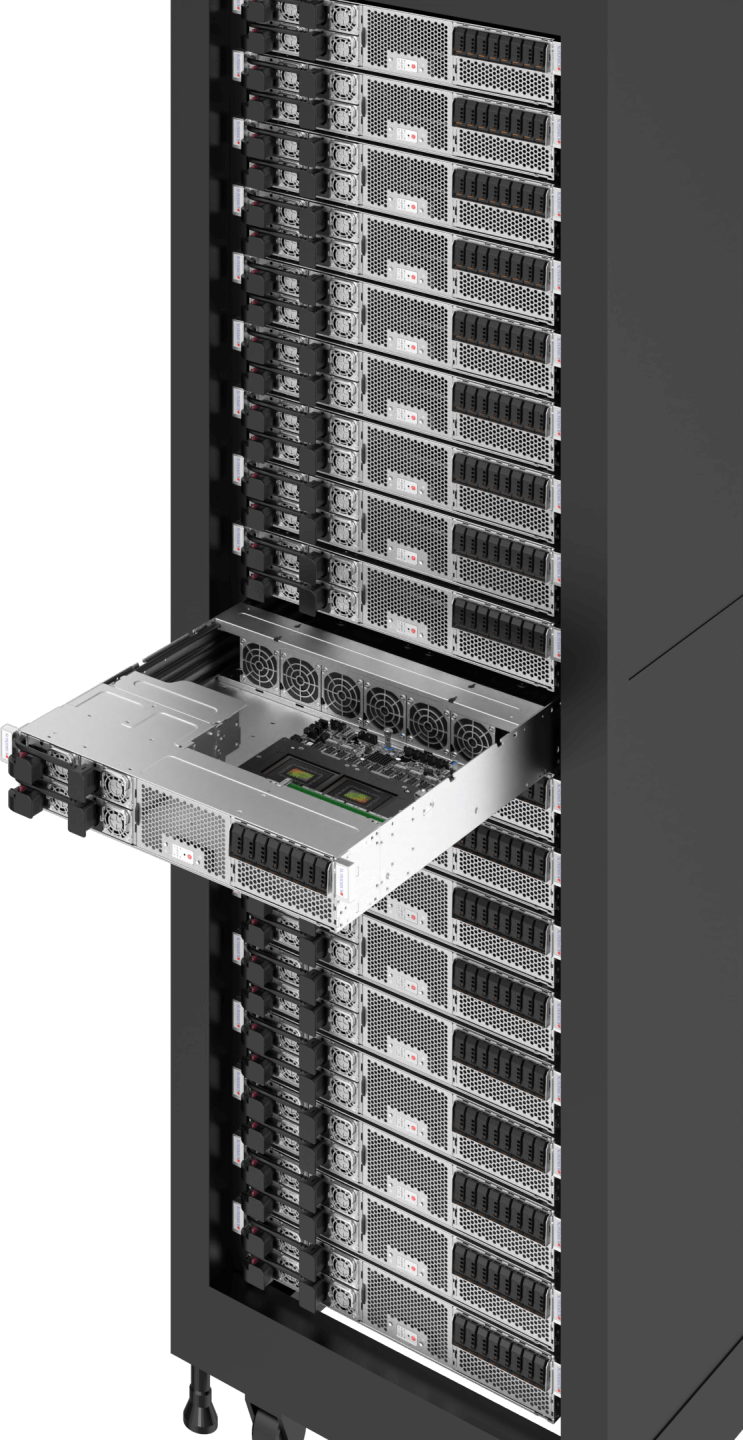

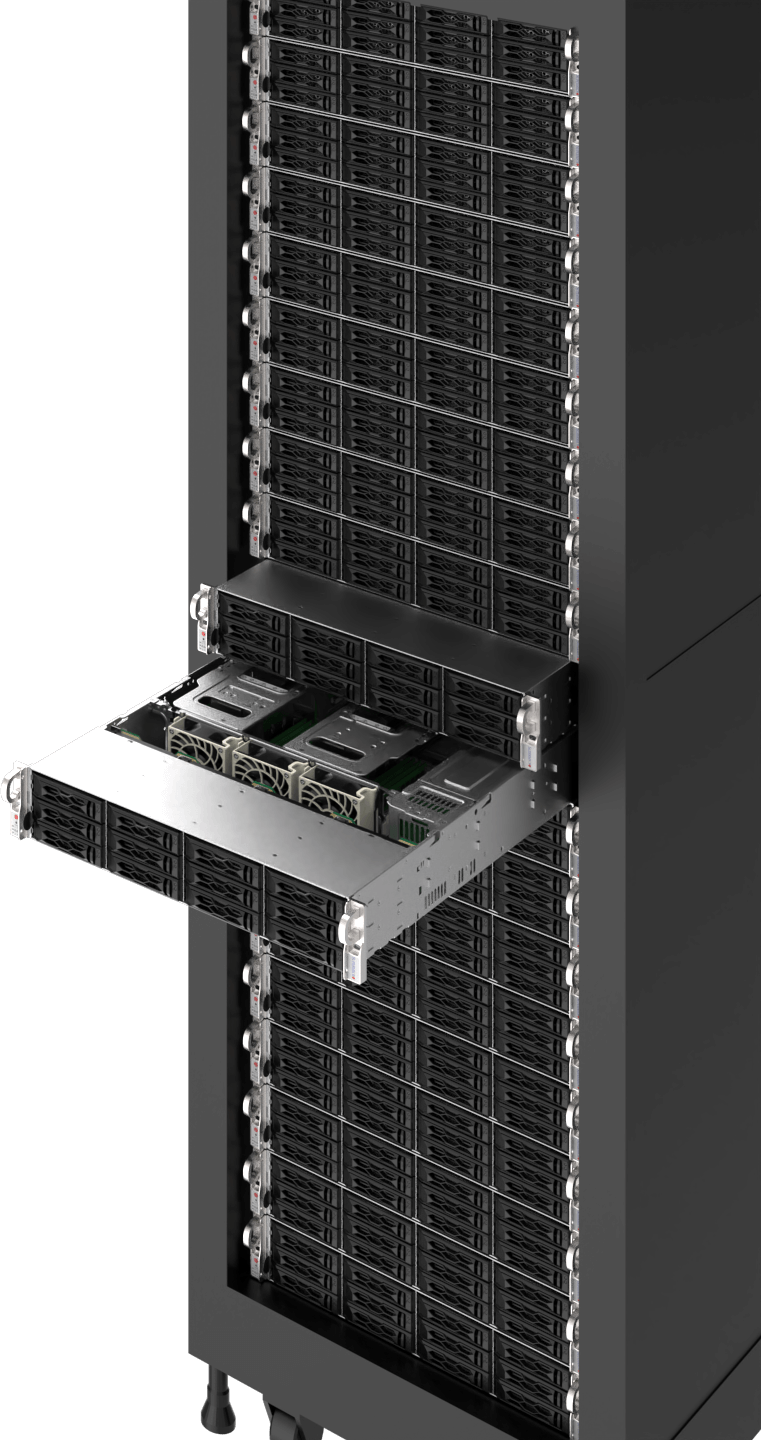

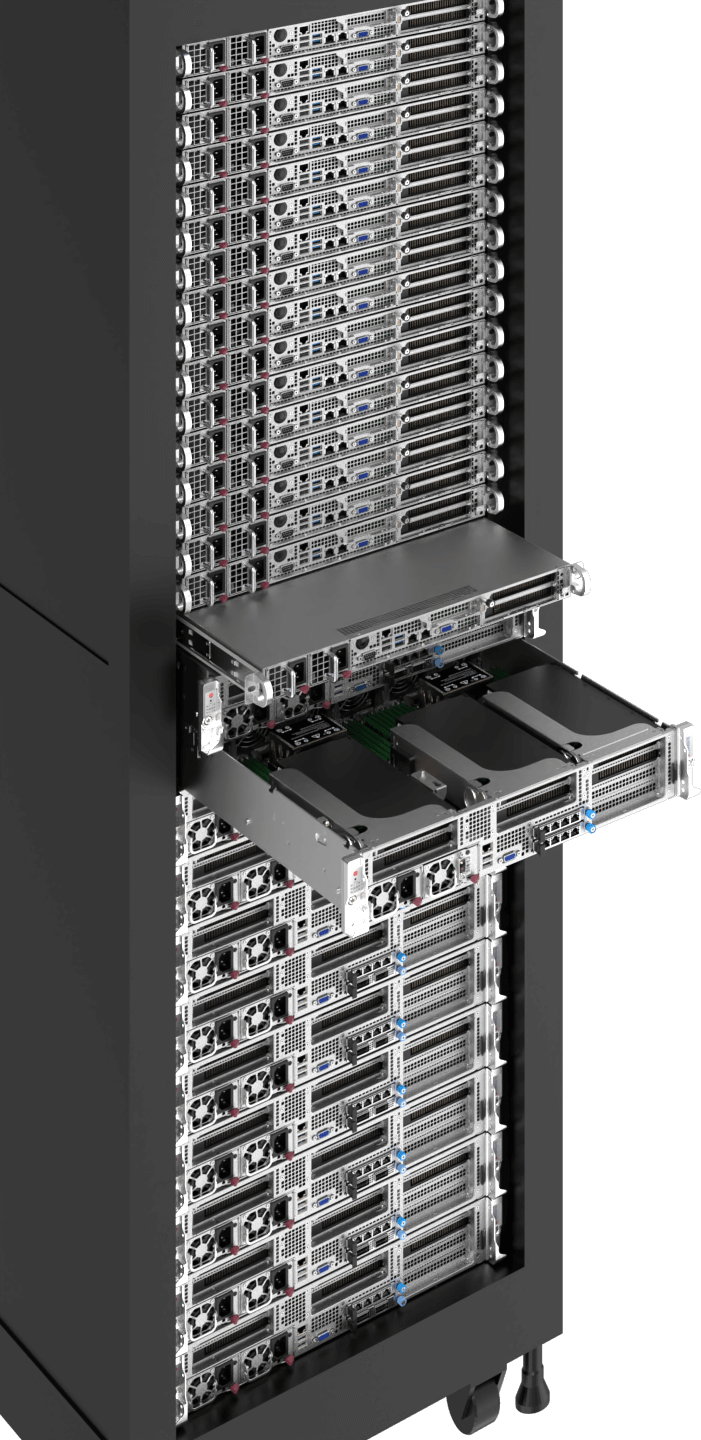

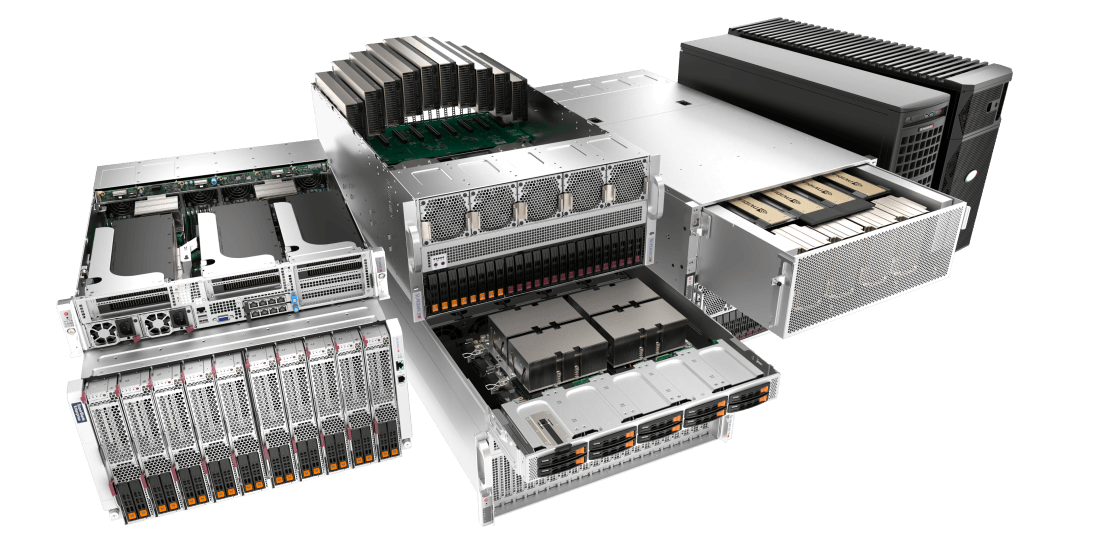

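Large-scale AI training demands cutting-edge technology that maximizes the parallel computing power of GPUs, so that AI models with billions or even trillions of parameters can be trained on massive datasets. These systems use NVIDIA® HGX™ B200 and GB200 NVL72, the fastest NVLink® and NVSwitch® GPU-to-GPU interconnects with up to 1.8 TB/s of bandwidth, and the fastest 1:1 networking to each GPU for node clustering. They are optimized to train large language models from scratch and serve them to millions of concurrent users. All-flash NVMe storage completes the stack with a fast AI data pipeline, and we also provide fully integrated racks with liquid cooling options to ensure rapid deployment and a smooth AI training experience.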
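To put the interconnect figure in perspective, the following is a rough back-of-the-envelope sketch, not a benchmark. It estimates how long one full copy of a model's weights would take to move at the quoted 1.8 TB/s peak. The parameter counts, the 2 bytes per parameter (16-bit weights), and the function names are illustrative assumptions, and real transfers will be slower than this ideal bound.

```python
# Back-of-the-envelope estimate only; all inputs below are illustrative assumptions.

PEAK_BANDWIDTH_BYTES_PER_S = 1.8e12  # 1.8 TB/s, the peak figure quoted in the text


def weights_size_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Size of one copy of the weights, assuming 2 bytes (16 bits) per parameter."""
    return num_params * bytes_per_param


def ideal_transfer_seconds(
    num_bytes: float, bandwidth_bytes_per_s: float = PEAK_BANDWIDTH_BYTES_PER_S
) -> float:
    """Lower bound on transfer time: ignores latency, protocol overhead, and contention."""
    return num_bytes / bandwidth_bytes_per_s


if __name__ == "__main__":
    # Hypothetical model sizes chosen to match the "billions to trillions" range.
    for label, num_params in [("10 billion", 10**10), ("1 trillion", 10**12)]:
        size = weights_size_bytes(num_params)
        seconds = ideal_transfer_seconds(size)
        print(f"{label} parameters: {size / 1e12:.2f} TB, at least {seconds:.3f} s at peak")
```

Under these assumptions, a 1-trillion-parameter model occupies about 2 TB of weights, so even a single ideal pass at peak bandwidth takes roughly 1.1 seconds.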

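Workload Sizes

- Extra Large

- Large

- Medium

- Storage

Resources

HPC/AI

Engineering Simulation, Scientific Research, Genomic Sequencing, Drug Discovery

To shorten the time to discovery for scientists, researchers, and engineers, more and more HPC workloads are being augmented with machine learning algorithms and GPU-accelerated parallel computing to deliver faster results. Many of the world's fastest supercomputing clusters now harness the power of GPUs and AI.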

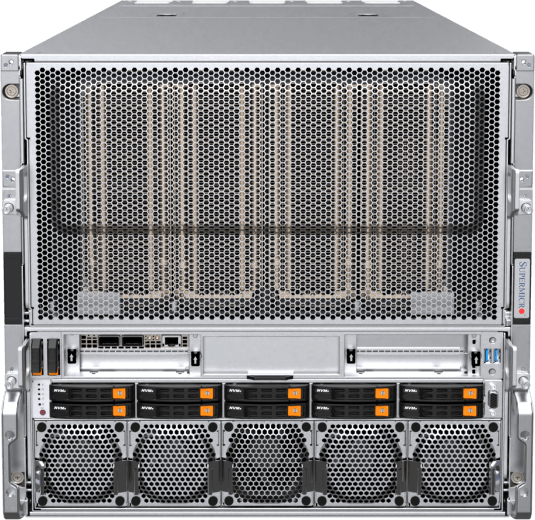

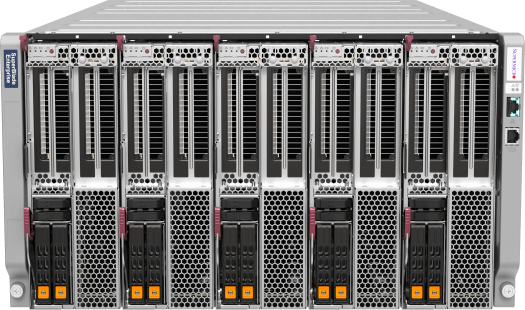

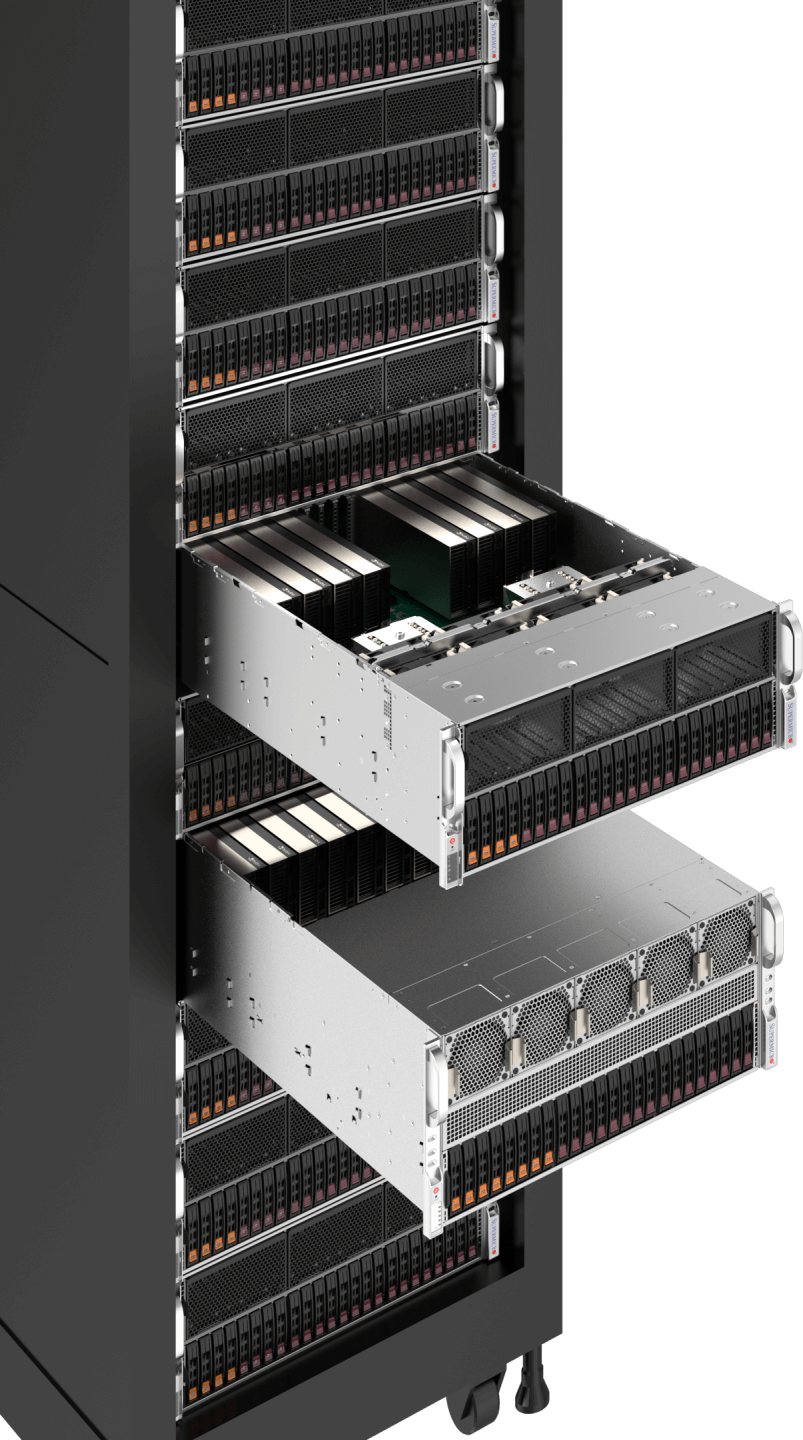

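HPC workloads typically involve data-intensive simulation and analytics on massive datasets with high precision requirements. GPUs such as NVIDIA's H100/H200 provide unprecedented double-precision performance, delivering up to 60 teraflops per GPU. Supermicro's highly flexible HPC platforms support high GPU and CPU counts in a variety of dense form factors, with rack-scale integration and liquid cooling.

Workload Sizes

- Large

- Medium

Resources

Enterprise AI Inference and Training

Generative AI Inference, AI-Enabled Services/Applications, Chatbots, Recommender Systems, Business Automation

The rise of generative AI is recognized as the next frontier for industries ranging from technology to banking and media. The race to adopt AI is on, as organizations look to it to fuel innovation, significantly boost productivity, streamline operations, make data-driven decisions, and improve customer experiences.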

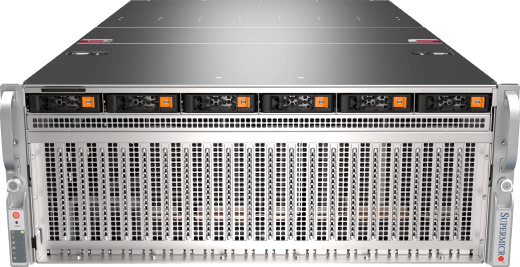

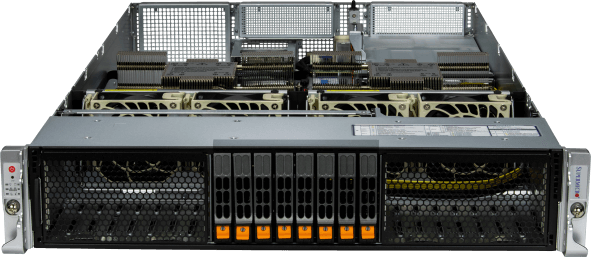

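Whether for AI-assisted applications and business models, intelligent human-like chatbots for customer service, or AI that co-pilots code generation and content creation, enterprises can take advantage of open frameworks, libraries, and pre-trained AI models, and fine-tune them with their own datasets for their unique use cases. As enterprises deploy AI infrastructure, Supermicro's broad range of GPU-optimized systems offers an open modular architecture, vendor flexibility, and easy deployment and upgrade paths, helping them keep pace with rapidly evolving technology.

Workload Sizes

- Extra Large

- Large

- Medium

Resources

Visualization and Design

Real-Time Collaboration, 3D Design, Game Development

Modern GPUs raise the fidelity of 3D graphics and AI-enabled applications and are accelerating industrial digitalization. True-to-reality 3D simulation is transforming product development and design processes, manufacturing, and content creation, enabling new heights of quality, unlimited iterations at no opportunity cost, and faster time to market.

Build virtual production infrastructure at scale and accelerate industrial digitalization with Supermicro's fully integrated solutions, including 4U/5U 8-10 GPU systems, the NVIDIA OVX™ reference architecture, Universal Scene Description (USD) connectors optimized for NVIDIA Omniverse Enterprise, and NVIDIA-Certified rackmount servers and multi-GPU workstations.

Workload Sizes

- Large

- Medium

Resources

Content Delivery and Virtualization

Content Delivery Networks (CDNs), Transcoding, Compression, Cloud Gaming/Streaming

Video delivery workloads still make up a significant share of Internet traffic. As streaming service providers increasingly offer content in 4K and even 8K, or cloud gaming at higher refresh rates, GPU acceleration with media engines is essential. It multiplies the throughput of streaming pipelines, while newer technologies such as AV1 encoding and decoding reduce the amount of data required and improve visual fidelity.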

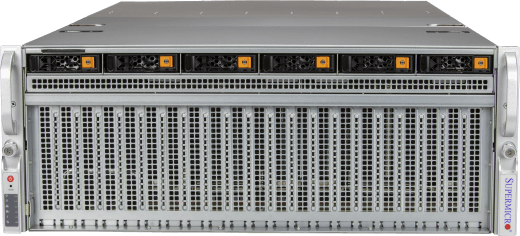

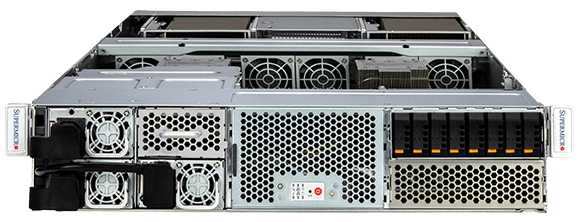

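Supermicro's multi-node and multi-GPU systems, such as the 2U 4-node BigTwin® system, meet the rigorous requirements of modern video delivery. Each node supports the NVIDIA® L4 GPU and offers ample PCIe Gen5 storage and networking speed to drive the demanding data pipelines of content delivery networks.

Workload Sizes

- Large

- Medium

- Small

Resources

Edge AI

Edge Video Transcoding, Edge Inference, Edge Training

Across industries, enterprises whose employees and customers work at edge locations such as cities, factories, retail stores, and hospitals are increasingly investing in deploying AI at the edge. By processing data at the edge with AI and ML algorithms, businesses can overcome bandwidth and latency limitations and enable real-time analytics for timely decision-making, predictive care, personalized services, and streamlined business operations.

Purpose-built and environment-optimized for edge computing, Supermicro's edge systems come in a variety of compact form factors and deliver, out of the box, the performance needed for low-latency, open-architecture deployments. They provide pre-integrated components, compatibility with diverse hardware and software stacks, and the privacy and security features that complex edge deployments require.

Workload Sizes

- Extra Large

- Large

- Medium

- Small

Resources

COMPUTEX 2024 CEO Keynote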